AWS Database Migration Service: Efficiency at your fingertip

April 4th, 2019

By Josh Tibbs

Since the excitement of AWS re:Invent 2018, I have been experimenting with new ways to leverage AWS services to increase efficiency, speed up processes, and reduce costs. I was recently told about a problem with a traditional data refresh process on AWS RDS, a process that was in use because of access limitations in the RDS service for Microsoft SQL Server. Although I’m an infrastructure engineer and technologist, I’m certainly not a database administrator. Even so, I was sure there was some way AWS could make this process work without friction. I found a solution with AWS Database Migration Service (DMS).

Moving beyond the DMS use case

I had been experimenting with the AWS Database Migration Service and knew that, at its heart and soul, DMS migrates data. The go-to use case for this tool is migrating databases to AWS RDS, but the flexibility it gives the operator opens it up to many more uses. DMS can perform heterogeneous and homogeneous database migrations, to or from the cloud, and convert schemas. It is scalable in that various instance types are available to power the data migration and conversion. Because it offers continuous replication, it can maintain a nearly current copy of the data, which keeps the target up to the minute for cutover.

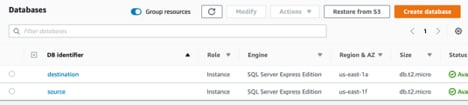

This gave me an idea. What if I used DMS in a non-continuous fashion to effect an on-demand refresh from a “production” database to a “development” database? Using the AWS console, I set up two test databases:

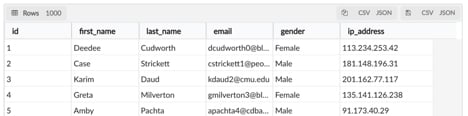

In each of those databases, I loaded 1,000 rows of test data (generated using Mockaroo) into tables. I knew that I would be destroying the data in the “target” database, but this gave me a way to confirm that the data was consistent post-refresh and that the “target” database table was correctly truncated.

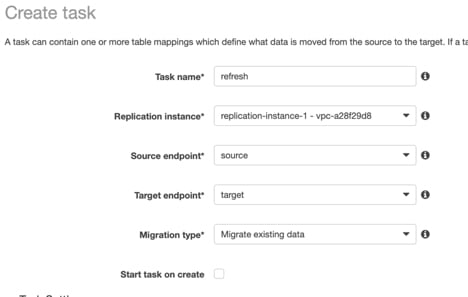

Moving on to DMS, I needed to assemble all the pieces to make replication work. I set up two endpoints, one each to “source” and “target” databases.
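For readers who would rather script this step than click through the console, here is a minimal sketch of creating the two endpoints with boto3's DMS `create_endpoint` call. The SQL Server engine, port 1433, and every identifier, host name, and credential in the usage note are assumptions for illustration, not values from my setup. The helpers take the DMS client as an argument so they can be tried out with a stand-in client.

```python
def endpoint_params(identifier, endpoint_type, server, database, username, password):
    """Build keyword arguments for the DMS create_endpoint call.

    Assumption: both databases are SQL Server listening on the default port.
    """
    if endpoint_type not in ("source", "target"):
        raise ValueError("endpoint_type must be 'source' or 'target'")
    return {
        "EndpointIdentifier": identifier,
        "EndpointType": endpoint_type,
        "EngineName": "sqlserver",  # assumed engine
        "ServerName": server,
        "Port": 1433,               # assumed default SQL Server port
        "DatabaseName": database,
        "Username": username,
        "Password": password,
    }


def create_endpoints(client, source, target):
    """Create both endpoints and return their ARNs keyed by endpoint type."""
    arns = {}
    for params in (source, target):
        response = client.create_endpoint(**params)
        arns[params["EndpointType"]] = response["Endpoint"]["EndpointArn"]
    return arns
```

With real credentials, this would be called as `create_endpoints(boto3.client("dms"), source, target)`, where `source` and `target` come from `endpoint_params(...)`. In practice the password should come from a secrets store rather than from source code.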

Interestingly, I could just as easily have used an on-premises database as an endpoint, assuming connectivity was adequate for the data to be transferred. Next, I created a replication instance, which provides the horsepower to run the replication task. The replication task itself ties together the source, target, replication instance, and the rules for the copy. For my data refresh experiment, I took care to uncheck the “Start task on create” box so that my data would remain untouched until I directed a refresh. You can specify criteria to include or exclude tables and columns under “Table Mappings.”
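The same task definition can be sketched in code. The example below builds a table-mapping document with one selection rule and the arguments for boto3's `create_replication_task`. The `dbo` schema, the `%` wildcard, the `full-load` migration type, and the `TRUNCATE_BEFORE_LOAD` prep mode are my assumptions about what a refresh like this would use, and the task identifier and ARNs are placeholders. As far as I know, creating a task through the API does not start it, which matches leaving “Start task on create” unchecked.

```python
import json


def selection_rule(rule_id, schema, table, action="include"):
    """One DMS table-mapping selection rule (action is 'include' or 'exclude')."""
    return {
        "rule-type": "selection",
        "rule-id": str(rule_id),
        "rule-name": str(rule_id),
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": action,
    }


def build_task_params(task_id, source_arn, target_arn, instance_arn, rules):
    """Keyword arguments for create_replication_task, for a one-shot full load."""
    # Assumption: truncate target tables before each load so a refresh
    # replaces the old rows instead of appending to them.
    settings = {"FullLoadSettings": {"TargetTablePrepMode": "TRUNCATE_BEFORE_LOAD"}}
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        "MigrationType": "full-load",
        "TableMappings": json.dumps({"rules": rules}),
        "ReplicationTaskSettings": json.dumps(settings),
    }


def create_task(client, params):
    """Create the task and return its ARN; client is a boto3 DMS client."""
    response = client.create_replication_task(**params)
    return response["ReplicationTask"]["ReplicationTaskArn"]
```

A call such as `build_task_params("dev-refresh", src_arn, tgt_arn, inst_arn, [selection_rule(1, "dbo", "%")])` would include every table in the hypothetical `dbo` schema. Adding a second rule with `action="exclude"` narrows the copy.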

Once I had everything set up, it was time for the moment of truth. I went to the tasks on the console, selected my task, and clicked the “Start/Resume” button. I waited a moment as my task cycled through to “load complete” and then viewed the task logs. The logs showed that a complete copy of my table had been performed in about a minute, even on my micro RDS and DMS instances. I checked the “target” database and, sure enough, my source data was in my target table and the row count was spot on. Success!
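The wait-and-check step can also be sketched with boto3 instead of the console. The helpers below poll `describe_replication_tasks` until the task reports a `stopped` or `failed` status, then read per-table row counts from `describe_table_statistics`. The polling interval and retry limit are arbitrary choices of mine. I am also assuming that a finished full load shows up as `stopped`, and pagination of the statistics is ignored for brevity.

```python
import time


def wait_for_full_load(client, task_arn, poll_seconds=15, max_polls=40):
    """Poll the task until it stops or fails; return the last task description."""
    filters = [{"Name": "replication-task-arn", "Values": [task_arn]}]
    for _ in range(max_polls):
        tasks = client.describe_replication_tasks(Filters=filters)["ReplicationTasks"]
        task = tasks[0]
        if task["Status"] in ("stopped", "failed"):
            return task
        time.sleep(poll_seconds)
    raise TimeoutError("replication task did not finish within the polling window")


def loaded_row_counts(client, task_arn):
    """Map 'schema.table' to the number of rows DMS reports for the full load."""
    stats = client.describe_table_statistics(ReplicationTaskArn=task_arn)
    return {
        f'{t["SchemaName"]}.{t["TableName"]}': t["FullLoadRows"]
        for t in stats["TableStatistics"]
    }
```

Comparing the result of `loaded_row_counts` with the expected 1,000 rows gives the same sanity check I did by hand against the target table.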

Integrating AWS IoT

After marveling at the ease of this, I started to think about how I could further leverage the simplicity of the whole thing. At this point, I could click a button in the AWS console and initiate a data refresh. How much easier could it get? Well, what if I could push a physical button? I happened to have an AWS IoT button on my desk. As a demonstration of the possible, I used it to trigger my data refresh. This hardly has a practical use. I can’t imagine an organization with a pile of buttons used to refresh data. But most of this could be applied to any external trigger—maybe an alternate scheduler, maybe via a service management portal, or maybe another process trigger.

This is AWS and everything has an API, so I quickly worked out that I could trigger my data refresh from a Lambda function. With literally 11 lines of code, I built that function:

    import json
    import boto3

    def lambda_handler(event, context):
        client = boto3.client('dms')
        response = client.start_replication_task(
            ReplicationTaskArn='arn:aws:dms:us-east-1:843888796499:task:5CQ5RULRXOEW67PTY7K7WSLNAT',
            StartReplicationTaskType='resume-processing'
        )

        return None

One quick test run later and that worked, too! I was on a roll! Now, all I had to do was reset and re-provision my IoT button with the app and connect it to my Lambda function. With that done, I pressed the button, waited a moment, and checked the logs again. It worked! I literally had the ability to refresh my database at the push of a button.
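If you adapt the function for another trigger, one possible variation is to read the task ARN from configuration and hand a short status summary back to the caller. The sketch below assumes a hypothetical `DMS_TASK_ARN` environment variable set on the Lambda function. It is not the code I deployed, only an illustration of the same `start_replication_task` call with the client passed in.

```python
import os


def start_refresh(client, task_arn, start_type="resume-processing"):
    """Start the DMS task and return a small summary of the response."""
    response = client.start_replication_task(
        ReplicationTaskArn=task_arn,
        StartReplicationTaskType=start_type,
    )
    task = response["ReplicationTask"]
    return {"task": task["ReplicationTaskIdentifier"], "status": task["Status"]}


def lambda_handler(event, context):
    # boto3 is imported here so start_refresh can be exercised without it.
    import boto3

    # DMS_TASK_ARN is a hypothetical environment variable name.
    task_arn = os.environ["DMS_TASK_ARN"]
    return start_refresh(boto3.client("dms"), task_arn)
```

The API also documents `start-replication` and `reload-target` as start types. Which one suits a repeated refresh depends on the task's migration type, so treat the default here as a carry-over from my function and not as a recommendation.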

That button sits on my desk still today. Sometimes I press it just to remind myself that simple AWS solutions exist. How easy it was to pull together a few services and deliver efficiency without specialized knowledge or costly tools!

Need help migrating to AWS or implementing an innovative idea? ERP Suites is an AWS Partner. We align cloud services with business goals to help you maximize your cloud technology investment. Learn more at erpsuites.com.

Josh Tibbs has modeled leadership in networking, collaboration, architecture and application performance for 25 years. As Director of Technology at ERP Suites, he inspires new learning, critical thinking, and creative problem-solving to shape high-quality, affordable cloud services.
