This session, Optimizing AI Costs on OCI, explores strategies for deploying and managing AI workloads on Oracle Cloud Infrastructure (OCI). Charles Anderson from ERP Suites highlights practical approaches to balancing performance with cost efficiency, including leveraging the OCI Cloud Cost Estimator, pay-as-you-go billing, and scaling resources appropriately. Key topics include avoiding overspending through rightsizing, meeting service level objectives, respecting OCI service limits, and aligning AI spend with business goals. The session also covers optimization tactics such as automated start/stop scheduling, tagging, and budgeting, while illustrating cost models for small prototypes to enterprise-scale deployments.

Table of Contents

- Using the Cloud Cost Estimator

- Why Rightsize AI on OCI

- Reducing Opex and Best Practices

- Performance, SLOs, and Service Limits

- Aligning Spend, Tagging, and Budgeting

- Optimization Strategies and Cost Models

- Reference Architectures, Key Takeaways, and Wrap-Up

Transcript

Welcome, Speaker Background, and Session Objectives

Okay. Welcome, everyone, to the next session of AI Week: Optimizing AI Costs on OCI, Rightsizing AI for JD Edwards. I'm Charles Anderson. I'm the lead JDE cloud engineer at ERP Suites, and I've been working with OCI since 2018, pretty much since it was released. I am an OCI Certified Architect Associate and a JD Edwards CNC 9.2 certified person, if you want to call it that.

Let's see here.

Looks like it's just me. Scott was here with me yesterday. The objective for this presentation is to show deploying AI solutions on OCI and the capabilities it offers for enhancing JDE. But really it's more than just JDE: a lot of this presentation will apply to any services that you deploy to OCI or, in certain aspects, to any public cloud in general. So we're going to go over some examples of AI deployments built on serverless architecture in OCI and integrated with E1. Looks like Scott joined there. You can expand that to include non-JDE applications or even build a standalone AI application on OCI. It's geared more towards technical users. I do get into some details on the billing and costing aspects of any service within OCI, but I'm not a FinOps person. I don't have a finance background. I'm not an accountant. But having worked with JD Edwards for 20-plus years, and having worked with accountants and CFOs, etc., after a while that stuff starts to bleed over a little bit. So hopefully this will provide a practical cost framework to help you when you're looking at developing or architecting an AI solution on OCI.

Using the Cloud Cost Estimator

So one of the first resources you want to look for, if you have not seen it before, is the Cloud Cost Estimator. It's a free website. You don't even have to log into Oracle with an account to use it. It gives you the ability to go out and estimate the cost of pretty much any AI service or non-AI service within OCI.

There are configurable drop-down menus. You can edit the number of hours per month, for instance, that you want to operate the system. Say the default for Autonomous Database is a full month. You get the cost there. You can edit that and say, I only want to run this during the week, I want to give it the weekend off. You can do that. Or if you only want to run it 12 hours a day. The same can be said for the digital assistant.

Excuse me, I'll go back. The digital assistant: you will find that a single Oracle Digital Assistant is not inexpensive. It's not a trivial cost when you look at running it 744 hours per month. But if you were to reduce that to 200, or 300, or 400 hours, the cost goes down significantly because you're only paying for what you use. It's a pay-as-you-go model, and there can be some additional savings there, but we'll get into that a little bit later.
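To make the pay-as-you-go arithmetic concrete, here is a minimal sketch in Python. The hourly rate is a made-up placeholder, not an Oracle price, and the function name is hypothetical; the real rate for any service should come from the Cloud Cost Estimator. It also ignores storage, which continues to be billed while a service is stopped.

```python
HOURS_IN_31_DAY_MONTH = 744  # 31 days * 24 hours, the figure quoted in the session


def monthly_cost(hourly_rate: float, hours_per_month: float) -> float:
    """Pay-as-you-go cost: billed only for the hours the service actually runs."""
    if not 0 <= hours_per_month <= HOURS_IN_31_DAY_MONTH:
        raise ValueError("hours_per_month must be between 0 and 744")
    return round(hourly_rate * hours_per_month, 2)


# Hypothetical rate for illustration only; look up the real one in the estimator.
ASSUMED_HOURLY_RATE = 1.00

if __name__ == "__main__":
    for hours in (744, 400, 300, 200):
        cost = monthly_cost(ASSUMED_HOURLY_RATE, hours)
        saving = 1 - hours / HOURS_IN_31_DAY_MONTH
        print(f"{hours:>3} h/month -> ${cost:,.2f} ({saving:.0%} below always-on)")
```

Because the cost is linear in hours, whatever the real rate turns out to be, the relative saving depends only on how many of the 744 hours you actually run.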

Why Rightsize AI on OCI

So the question is, why rightsize AI on Oracle Cloud Infrastructure? Really, why rightsize any service on OCI? We have some slides to go through here. The primary points are to reduce your operational expense with pay-as-you-go billing, to meet latency and throughput service level objectives, and to respect the OCI soft limits, or service limits. Those are there for a reason. You do need to request to have a service limit increased in certain cases, and you have to know about that ahead of time, but we'll get into that a little bit later as well.

Obviously you want to avoid surprise quota ceilings. Looks like we got a little bit of an error there, but no big deal. And align your AI spend with your business objectives and KPIs.

Reducing Opex and Best Practices

So, reducing your opex run rate with pay-as-you-go billing. You want to avoid the commit-or-lose-it risks. If you're not using it, you're still being billed for it. That's the bottom line. That's true for pretty much any service in OCI. If you stop it, in most cases you're only going to pay for the storage that you've used. So if you upload a terabyte of data to the system and you're not using the database and you shut it down, you're not going to be billed for CPU and memory utilization. That can go to some other customer or sit idle, but you won't be billed for it if you're actually shutting it down. But you do get billed for that storage.

Oversizing your system obviously leads to direct, avoidable cost increases. And that happens a lot because you don't want to have an undersized system. You don't want to have that unexpected uh-oh moment of, hey, performance is really bad. So you might be tempted to throw more hardware at it than you need, but then that's going to hit your bottom line at the end of the month. And you can actually see that throughout the month. It's a near-real-time meter that you can go out and look at in the OCI console. So there shouldn't be any surprises.

Especially when you first spin up a service, any service in OCI, you kind of want to know, hey, what's this costing us? And you'll see that there's a dramatic difference between a Linux compute instance or VM and running a full-on generative AI service, for instance a compute cluster with multiple GPUs on it. The cost is wildly different between those two data points. But you can see all of that in the cost and billing console.

So obviously that's why it matters to avoid those cost overruns. Best practice would be to start with a minimal configuration and scale it up gradually, monitor usage with the native cost analytics, and tag services for the chargebacks that you might have.

There are some tagging features in OCI, and there's a special cost tag that you can apply to services, and that really helps when you're looking at the cost analysis.

Performance, SLOs, and Service Limits

So the next slide here: meet latency and throughput SLOs, or service level objectives. It's not just about shrinking what you have. It's about making sure that it's sized appropriately, that you don't have those midnight emergency alerts that, hey, performance is bad, or the system is down and can't function, but also that you're not sitting there being billed $100,000 a month for something that you only need $10,000 a month of service for, for instance.

So it's important to understand OCI service shapes, and also, and this goes back to the cost estimator website, how Oracle comes up with the billing metrics for each service. It does vary. How you get charged for the database services versus a digital assistant is different. Both of those, Autonomous Database and Digital Assistant, are managed services, but how they meter them is different. And the number of transactions per second could be different depending on whether it's a production instance or a development instance.

And you want to avoid those cold starts, where recently accessed data is not in memory and performance is low. You see that with traditional on-premises systems. It's not something that's unique to Oracle Cloud or public cloud. It could be any system where, if you have data that you're not keeping in memory and you shut the system down and bring it back up, it's slow the first time. And that can happen with transactions, where those first API calls could be slow.

Again, respecting those service limits, or soft limits. You'll find out, working for the first time with several OCI services, that the service limit for, say, a generative AI cluster would be zero. You're not even allowed to build an AI cluster unless you ask permission, like, pretty please, can I have an OCI generative AI cluster? You put in a service request for that, you tell them how many you want, and they will usually turn that around for you within about 24 hours.

But if you don't have that service limit set beforehand, and you're in production and you hit that peak time of the month and you have to put in a ticket to get that, it's not going to look good on you if you haven't done your homework on what the peak performance requirements are going to be. So again, avoiding errors is not just about uptime. It reflects on you, on your department, and on your IT readiness. Do safe fire drills and make your business successful. Really, it's not just about yourself or your department. It's about making the business successful.

So how do service limits work? Obviously they're in there for a reason: to protect that shared multi-tenant capacity. When you're in the public cloud, even if it's a serverless architecture, you're still using services that are running on servers that could be accessed by other customers at any given time. And if you're just sitting out there running something idle 24/7, that's not very efficient.

So they want to make sure that before you go out and deploy, you actually know that you need it. It's just a soft cap, a way to reduce those overprovisioning types of situations. Again, if traffic exceeds the default, you might get an HTTP 429 Too Many Requests error. You don't want to see that, especially in a mission-critical application.

And again, you can increase those in the OCI console under the governance and administration page, on the limits, quotas, and usage screen. In the past few weeks they have made some changes to the OCI administration console, so it might look a little different today than it did a few weeks ago. Things should generally be in the same place, but if not, search is your friend. You should be able to find pretty much anything with the search feature in the OCI console.
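On the client side, one common way to cope with an occasional HTTP 429 is to retry with exponential backoff rather than fail outright. The sketch below is a generic pattern, not something shown in the session; `call_with_backoff` and its `send` callable are hypothetical names, and `send` stands in for whatever HTTP call your application makes, returning a `(status_code, body)` pair. It does not replace requesting a proper service limit increase ahead of peak load.

```python
import random
import time
from typing import Callable, Tuple


def call_with_backoff(
    send: Callable[[], Tuple[int, str]],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Call send() and retry with exponential backoff while it returns HTTP 429."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    status, body = send()
    for attempt in range(1, max_attempts):
        if status != 429:
            break
        # Wait base_delay * 2^(attempt-1), plus jitter so clients do not retry in lockstep.
        delay = base_delay * (2 ** (attempt - 1)) + random.uniform(0, base_delay)
        sleep(delay)
        status, body = send()
    # Either a non-429 response, or the last 429 after all attempts are used up.
    return status, body
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting. If the last attempt still returns 429, the caller gets that response back and can alert or queue the work.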

Aligning Spend, Tagging, and Budgeting

And then lastly, to align your AI spend with business value. You can provide real-time cost visibility, and we've had to do that internally at ERP Suites as well: hey, how are we doing with AI spend? We have AI projects, and we have OCI services spun up, some of them running 24/7, and what's that doing to our bottom line? It helps to be able to go out there in the console and provide real-time feedback to the finance person and say, "Hey, this is what we're spending in this area."

And then it's good to compartmentalize things and tag things for that chargeback, if you have to deal with that. Maybe you just have one big budget and you're just looking at, hey, how can we reduce costs? But you might have a shared services model where you're charging back to departments based on their percentage of utilization, and the tagging becomes really key in that type of scenario. So it's a good feature to have, be aware of, and implement. It doesn't get turned on by default. You have to go in there and opt into those types of things.
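As a rough illustration of the chargeback idea, here is a small sketch that groups cost line items by a department tag and works out each department's share. The record layout (a `cost` field and a `tags` dictionary with a `department` key) is an assumption made for this example, not the format of an actual OCI cost report.

```python
from collections import defaultdict


def chargeback(line_items, untagged_label="untagged"):
    """Sum cost per department tag; return {department: (cost, share_of_total)}."""
    totals = defaultdict(float)
    for item in line_items:
        # Resources without the tag are grouped together so they stay visible.
        dept = item.get("tags", {}).get("department", untagged_label)
        totals[dept] += item["cost"]
    grand_total = sum(totals.values())
    return {
        dept: (round(cost, 2), round(cost / grand_total, 4) if grand_total else 0.0)
        for dept, cost in totals.items()
    }


if __name__ == "__main__":
    # Made-up numbers for illustration only.
    items = [
        {"cost": 100.0, "tags": {"department": "finance"}},
        {"cost": 200.0, "tags": {"department": "finance"}},
        {"cost": 600.0, "tags": {"department": "operations"}},
        {"cost": 100.0, "tags": {}},
    ]
    for dept, (cost, share) in chargeback(items).items():
        print(f"{dept}: ${cost:,.2f} ({share:.0%})")
```

Keeping an explicit bucket for untagged resources is a deliberate choice here: it shows how much spend cannot yet be charged back, which is usually the first thing to fix.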

So, some optimization strategies that you can deploy with OCI. The auto start and shutdown is not an automatic feature that you get. You have to go out and develop it. That's something that ERP Suites could help you with. You could go out and do some research on your own. You might use your favorite AI assistant to do some research on that. But the bottom line is you need to deploy OCI Functions and Events if you want to implement something like that, where it's starting and stopping on demand or by your schedule, basically. So if you want to make sure that the system's up by 5 a.m., then you can do that. Otherwise you're going to be billed for it 24/7, as I mentioned before.

Flexible shape scaling, scaling the GPU count down after training: that would only apply if you have a GenAI cluster. I think most of the JD Edwards customers that we would talk to probably aren't ready for that, and if you're not even using AI yet, you're probably not beyond a small scoping effort, a proof of concept, a prototype environment. In those cases you really want to be looking at serverless architectures and not at dedicated clusters.
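The scheduling decision at the heart of such a function can be sketched in a few lines of Python. This is only the decision logic, under assumed settings: the 5 a.m. start comes from the example above, while the 19:00 stop time, the weekday-only rule, and the names `desired_state` and `plan_action` are assumptions for illustration. The actual start or stop call through the OCI SDK, and time zone handling, are deliberately left out.

```python
from datetime import datetime, time
from typing import Optional

# Assumed schedule for illustration: up 05:00-19:00 on weekdays, off on weekends.
START = time(5, 0)
STOP = time(19, 0)
WORKDAYS = {0, 1, 2, 3, 4}  # Monday=0 ... Friday=4


def desired_state(now: datetime) -> str:
    """Return "RUNNING" if the resource should be up at this moment, else "STOPPED"."""
    if now.weekday() in WORKDAYS and START <= now.time() < STOP:
        return "RUNNING"
    return "STOPPED"


def plan_action(current_state: str, now: datetime) -> Optional[str]:
    """Return "START", "STOP", or None when the resource is already in the right state."""
    target = desired_state(now)
    if target == current_state:
        return None
    return "START" if target == "RUNNING" else "STOP"
```

A function triggered on a timer would call `plan_action` with the resource's current state and only act when it returns something, which keeps repeated invocations harmless.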

Optimization Strategies and Cost Models

That's something that confused even us when we started looking at this a year or two ago: hey, what do we need to be able to start developing on the system, and do we need a production cluster or not? Do we need to be training our own data? Can we do some of this with data warehouse and machine learning, and do we actually need dedicated GPUs? And it turns out, with the project that we've been working on and the proofs of concept that we've been putting together for customers, we actually don't need that, right?

So it's just a matter of every customer, ourselves included, looking at their own business requirements and determining, is this actually needed, before we go out and request a service limit increase and deploy that service. Because otherwise you deploy that service, you might set it and forget it, and it's just going to be sitting there running with no one actually using it. You see that show up in your cost analysis. You see that in your bottom line every month. Hey, why is our operational expense so high? And no one knows. You might call back and say, oh yeah, that's that AI service, we need that. But in reality you might not even need that much. You might be overprovisioned, etc. So it's really good to take a hard look at that.

Again, those tags: you can tag by environment and say, hey, this is a dev, test, or prod system, for your compartmental budgets. Budgets, again, are something that you can apply. They're not enabled by default, but you can go in and say, "Hey, I can look at the last three months, for instance, of AI spend or overall OCI spend in a compartment. Okay, that's my budget threshold. Now if I go maybe 10 or 20% above that, then I want to get an alert on that." And again, you can implement that with OCI Functions.
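The budget rule described here (average the last three months, alert when spend runs 10 to 20% above that) can be sketched as follows. The function names and sample figures are hypothetical, and this is only the arithmetic, not an OCI Budgets or OCI Functions integration.

```python
def budget_from_history(monthly_spend):
    """Use the average of the last three months of spend as the budget baseline."""
    last_three = monthly_spend[-3:]
    if not last_three:
        raise ValueError("need at least one month of history")
    return sum(last_three) / len(last_three)


def over_budget(current_spend, budget, alert_pct=0.10):
    """True when current spend exceeds the budget by more than alert_pct (0.10 = 10%)."""
    return current_spend > budget * (1 + alert_pct)


if __name__ == "__main__":
    history = [900.0, 1000.0, 1100.0]  # made-up compartment spend, oldest first
    budget = budget_from_history(history)
    for spend in (1050.0, 1150.0, 1250.0):
        print(spend, "10% alert:", over_budget(spend, budget, 0.10),
              "20% alert:", over_budget(spend, budget, 0.20))
```

Using two thresholds, as in the usage loop, gives an early warning at 10% and a more urgent one at 20%.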

You can basically build reports and schedule them to run, but you have to go out to the console to get those PDFs when it actually runs a report. There's no built-in solution. And this is not unique to OCI. AWS, I believe, has the same issue, where you have to build a Lambda function if you want to email a PDF to your inbox from the billing console. So just be aware of that. And I think some of that is just that OCI hasn't been around as long as AWS, and a lot of these features and functions are kind of mirroring what AWS has. To me it's just humorous that that type of functionality isn't included and that it's kind of mirroring what AWS does. So not a knock on Oracle or OCI at all. Just to me that's fine.

Here's an OCI cost model overview. Again, if you're just now starting out and you're not even in the prototyping phase yet, you're probably not going to require a generative AI dedicated endpoint, but you might be using a shared endpoint. You probably don't need a dedicated cluster. You probably don't need a GPU in a bare metal instance, for instance. You see that $4 per GPU hour; that adds up pretty quickly over the course of a month and a year. So you really need to know, hey, do I need to be doing fine-tuning right now? Do I have my model fine-tuned already? Do I need that instance to be running or not?

It's really key. Some things are ephemeral, right? You only need them for a short period of time. So once you're done with it, turn it off. You might reserve the instance. You might save the configuration and keep the data out there if you need it, or maybe just store a backup, that sort of thing. You don't necessarily need to have that instance running all the time.
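To see how quickly that adds up, here is the arithmetic as a short sketch. The $4 per GPU hour figure is the one quoted above; the 20-hour fine-tuning run is an assumed number used only to contrast an always-on GPU with one that is turned off when the job is done.

```python
GPU_RATE_PER_HOUR = 4.00  # the "$4 per GPU hour" figure quoted in the session
HOURS_PER_MONTH = 744     # 31-day month
HOURS_PER_YEAR = 8760     # 365 days


def gpu_cost(gpu_count: int, hours: float, rate: float = GPU_RATE_PER_HOUR) -> float:
    """Cost of running gpu_count GPUs for the given number of hours."""
    return gpu_count * hours * rate


always_on_month = gpu_cost(1, HOURS_PER_MONTH)  # 2976.0
always_on_year = gpu_cost(1, HOURS_PER_YEAR)    # 35040.0

ASSUMED_TUNING_HOURS = 20                       # hypothetical short fine-tuning run
ephemeral_run = gpu_cost(1, ASSUMED_TUNING_HOURS)  # 80.0

if __name__ == "__main__":
    print(f"One GPU, always on: ${always_on_month:,.2f}/month, ${always_on_year:,.2f}/year")
    print(f"One GPU, {ASSUMED_TUNING_HOURS}-hour run then off: ${ephemeral_run:,.2f}")
```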

Reference Architectures, Key Takeaways, and Wrap-Up

This is a reference architecture example, not an ERP Suites reference architecture, but just an example of what a small, medium, or large AI implementation overall might look like, with the number of daily tokens and the monthly costs associated with that. And you can see, for a small prototype environment, you're not going to spend a whole lot of money. This does not include a digital assistant, for instance. A digital assistant, again, is a managed service. There's a lot more functionality built into that than into just a generic chatbot. But the cost of a prototype environment, where you have a limited number of tokens that they might even give you for free, is going to be much, much lower than an environment where you need to have a generative AI cluster, for instance, or you're doing enterprise RAG, retrieval-augmented generation. So again, this is just an example of what that cost might look like on a monthly basis.

A very small prototype example: you could implement an OCI function, just a bit of code sitting out there, right, that could be running in Kubernetes for instance, that could call a GenAI shared endpoint. You could have your data stored in object storage; you don't necessarily need to have it stored in a dedicated database. And then you could have the OCI API Gateway securing inbound access for that. For OCI Functions, for instance, the first 2 million invocations are recorded as free. This is current as of April 2020. So, GenAI tokens, etc. So the cost is pretty low for a really small prototype environment. But once you start getting into more advanced deployments, including the digital assistant, the cost is going to go up dramatically from there.

And this is an example. This is not a quote or commitment or contract, but it's an example of what a digital assistant deployment might look like with address book and supplier master, customer master, who's address book, trigger UBE. And the benefits, again, are listed there. You can read them, but they're to drive efficiency, reduce cost, automate tasks, etc. There would be a one-time setup fee for that. You're going to have cost to develop, and you might capitalize that, and you're going to have the recurring operational expense that you pay directly to Oracle. We're not reselling OCI services. So the cost for a digital assistant is going to be a lot higher than if you were to implement a small document understanding deployment. But once you start looking at, okay, what does our business need, that cost can grow exponentially.

So you might look at it and say, hey, we have a certain number of documents that we can scan up to a limit, and those are free, but that might get you through two days of the month. That's when that cost starts to kick in. I think I skipped over the machine learning aspect, but if you have the Autonomous Data Warehouse or an Autonomous Transaction Processing system, you could implement machine learning within that instance, especially if you have the license-included option for the Enterprise Edition. You can do machine learning in the database, and that gives you AI features. The cost is listed there.

So, key takeaways for this. Rightsize first. You always want to try to match the model size, throughput, and SLA to the business need, because you don't want to have unexpected surprises. You don't want to wake up and have a nastygram in your inbox or a phone call from your boss wondering why you've blown the budget on day two of the month.

Move from a shared serverless architecture to a dedicated resource. Start small, then grow into what you need, and only deploy that when you know you need it. Don't just guess. You need to be looking at the bottom line. You need to be looking at the number of transactions that you estimate you're going to be processing, and, hey, can we actually reasonably meet our SLAs with a shared system, or do we need dedicated hardware for that? And maybe there's a security concern as well. It doesn't necessarily have to be a performance concern; it could be a security concern. Those can sometimes go hand in hand.

Automate savings. Again, that's really key. It's not a feature that's enabled by default. You can't just go in there and click the easy button, and you might have to develop a function to do that. But if you put the time in, you can see up to a 40 to 60% lower monthly bill. That's something that I think even ERP Suites ought to be looking at doing in certain cases and saying, "Hey, why do we have these resources running 24 hours a day? Maybe they only need to be running 12 hours a day, and maybe we don't need to run this on the weekend unless we've got a project that's got a deadline coming up." That sort of thing. You can reap the rewards if you actually put the time in. So there is some initial development cost, but once you have that code written, it's pretty much set and forget, right? Unless there's an API change, that stuff probably should just keep running continually.

And then again, implement tagging and tracking. Make sure you're compartmentalizing within OCI, not putting everything in the root compartment. Make a compartment for your various divisions if you need to do that, and put tags on resources. Using the cost-tracking tags will definitely help there with the FinOps dashboards. And train your end users as well to be able to go out there and do self-service in that system. You don't necessarily want to depend on an IT person to always be there to provide that information. If you can train that finance person to go out and look at those reports themselves, or, if you want to put the time in and, like I said, develop a function to have that report emailed to them, even better. Then you shouldn't have to worry about those phone calls.

Leverage incentives. I'm not going to give you a commitment here, but if you talk to your Oracle sales rep and start looking at universal credit commitments, you might see a non-trivial or substantial rebate opportunity there. Obviously there's always a downside to a commitment. You're committing to spend a certain amount, and if you don't use it, you're still spending that, right? So the pay-as-you-go model can benefit you there, but you can get a discount if you commit. It's really a black art sometimes, determining: hey, what have we done in the past? What does next year, what do the next two or three years look like? Maybe we can save some money if we're going to stick on this platform.

So that's what I have. It looks like we did a good 30-minute presentation here. If you have any questions, I don't see any comments in the session chat here, but I've done a lot of talking, so you take a break. I'll cover for you for a little bit.

Yeah, that was really interesting, Charles. Thank you. Um, I hadn't seen some of those costs. So, good to see you actually talking some numbers. Anybody have any questions about that? Let's drop them in the chat and we'll see if we can answer them.

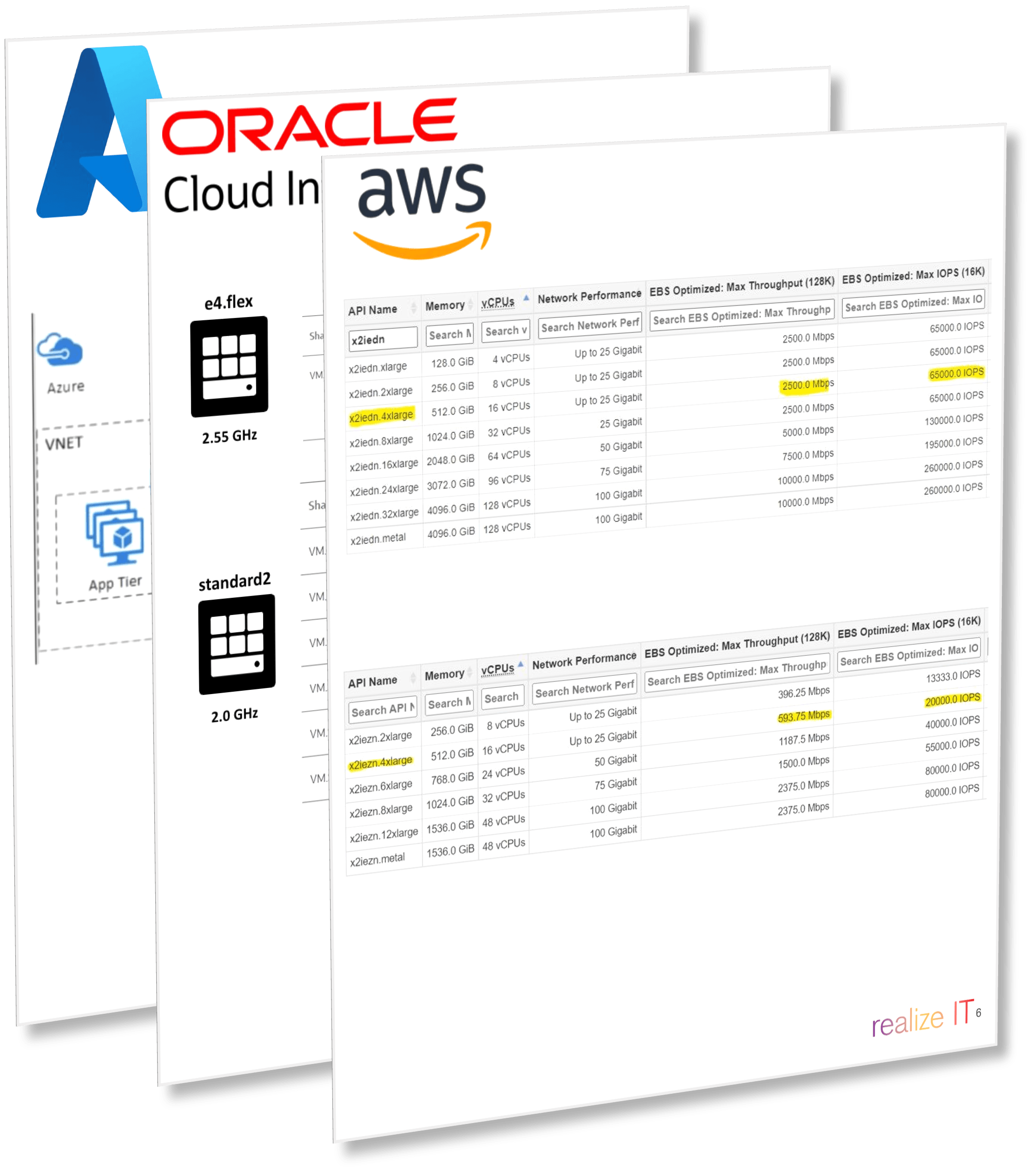

Yeah, I know, the one with the yellow background. I was thinking I have to go in there and fix that for downloads so we can see it easier. Yeah, I mean, there's some of that I wanted to include just to give people an idea of what they're looking at, but it's going to vary based on their business needs, right? Every solution should be tailored to what you need. I can't stress that enough. It's really hard to have a cookie-cutter price on some of these things, especially when you're talking about document understanding, because you might be doing receipt processing, you might be doing sales order processing, and they're totally different.

All right. Yeah. Well, I hope this was helpful and uh if it wasn't, uh promise to do better next time. You can leave a bad review for Charles and That's right. All reviews are are uh bring it up to him every day. Helpful in one way or another. We can learn from our

All right. Well, let me check the calendar here. Mo should be wrapping up his as well. So we'll have a break, and then we have two sessions starting at, what is that, 3:30: Sean for security, and then we have Jason from Oracle. He's giving a longer, hour-long session on Oracle Code Assist. That's right. So that should be nice and entertaining. And then if you're in security, we'll have Sean. And then we added this a day or two ago, but there's the live customer panel. So if you haven't had a chance to see it, if you already set your schedule, that is now on there. That's our last session, at 4:30. So we'll end with a half-hour session. We've got a couple of customers coming on. Manuel Ward from Oracle will be there to help ask some questions and see if they are thinking what you all are thinking.

All right, sounds good. We'll wrap it up here. Take a break and then come back here in about a half hour. Thanks, Scott. Thank you everyone for attending.

Video Strategist at ERP Suites
