Processor licensing can be confusing if you don't understand the resources required to run your database. Our cloud experts break down the details so you can avoid any misdirection and optimize your licenses. We'll also share how to determine your resource requirements so you don't waste money on Oracle processor licenses no matter what cloud platform you choose.

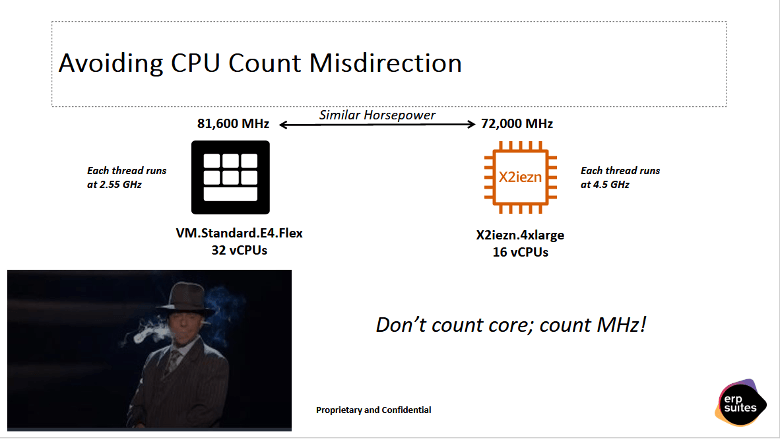

Avoiding CPU Count Misdirection

When customers set out to design a cloud architecture, they usually focus on the number of vCPUs they think they’ll need for optimal performance. While this is certainly an important consideration, the clock speeds those vCPUs will be running at are of equal importance. Consider the following diagram of possible system architectures. On the left, I have OCI with 32 vCPUs running at 2.55GHz. On the right, I have AWS, and I’m using an instance called X2iezn with 16 vCPUs running at 4.5GHz. If I just do the math, I have just under 82,000MHz in aggregate on the OCI side (32 × 2.55GHz = 81,600MHz). On the AWS side, even though I’m running half the number of vCPUs, I’m still very close in terms of aggregate horsepower, coming in at 72,000MHz (16 × 4.5GHz).

Bottom Line: When you’re considering possible system configurations, don’t focus exclusively on core counts, but multiply core counts by clock speeds and use the net results as your basis for comparison.
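To make that comparison repeatable, here is a minimal Python sketch of the arithmetic. The function name `aggregate_mhz` is just an illustration, and it uses 4.5GHz for the X2iezn, which is the clock speed that produces the 72,000MHz figure quoted above.

```python
def aggregate_mhz(vcpus: int, clock_ghz: float) -> float:
    """Aggregate clock capacity: vCPU count times clock speed, in MHz."""
    return vcpus * clock_ghz * 1000


oci = aggregate_mhz(32, 2.55)  # OCI example from the diagram
aws = aggregate_mhz(16, 4.5)   # AWS X2iezn example from the diagram

print(f"OCI: {oci:,.0f} MHz")
print(f"AWS: {aws:,.0f} MHz")
print(f"AWS delivers {aws / oci:.0%} of the OCI aggregate with half the vCPUs")
```

This is only a rough basis for comparison. It says nothing about how much of that capacity your workload actually uses, which is the next question.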

How Much CPU Are You Really Using?

In addition to considering how much aggregate horsepower your system can bring to bear, you’ll also need to do some analysis of how efficiently you’re using that processing power. For instance, let’s say you have 32 vCPUs that you’ve allocated for your on-premises system. Are you actually using them all? And if you’re not using them all, what should you really be optimizing for? Asking questions such as these is not only a key factor in potentially enhancing your performance and productivity, but it can also end up saving you a bundle in the long run.

Paul’s Law of Application Performance

When dealing with application performance issues, we’ve found that 90% of the time, the problem is bottlenecking on the database; and 90% of performance bottlenecking on the database is disk I/O. Occasionally, we’ll find some CPU bottlenecking, but in the vast majority of cases the problem ends up being disk I/O.

So, how can you mitigate that disk bottleneck? There are several potential solutions:

- More RAM. This is my personal favorite, at least as far as bottlenecks go. The more RAM you give the server, the more SGA you have, and the more queries being serviced out of SGA.

- Faster disk I/O. When it comes to disk I/O, there’s no such thing as “too fast.”

- Database compression. Compressing the data doesn’t just mean compressing it on disk, but also in SGA. This means that you’re storing a lot more data in memory, and it’s more likely that your queries are being handled out of RAM as opposed to performing costly disk I/Os.

Using this approach as a framework, and always thinking about the kind of bottlenecking we’re optimizing for, let’s look at options on the various clouds.

OCI

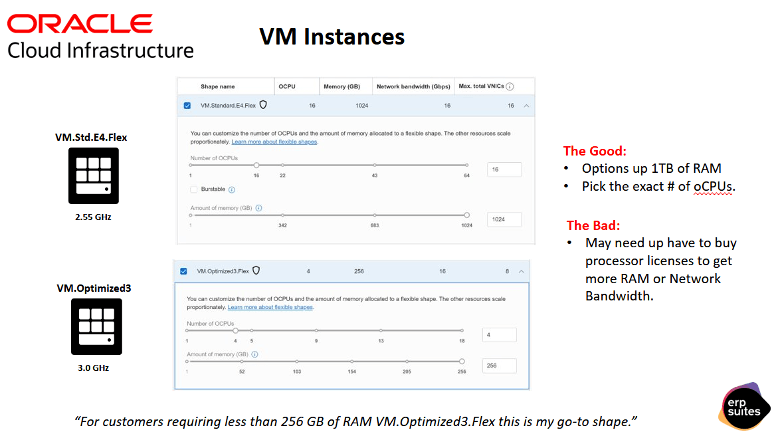

Let’s start by looking at VM instances. This is where you’re going to stand up an instance and then install Oracle on top of it, as opposed to database as a service (DBaaS). This is not fundamentally different from on-premises systems you may have where you’re running Oracle on top of a VM instance, perhaps using Oracle VM or Oracle KVM to do it.

There are two different flavors you can use in this approach: StandardE4.Flex and Optimized3.Flex. The really cool thing about these flexible shapes is you’ve got the ability to set the number of OCPUs (remember 1 OCPU = 2 vCPUs), and you can set this independently from RAM. This looks really good if you want to maximize RAM and minimize the number of CPUs, but there are a few things to keep in mind:

- You have to keep a 1:64 ratio between the OCPUs that you allocate and the amount of RAM in GB. So, if I had 1 OCPU and I wanted to go above 64GB of RAM, I would need to add another OCPU to get that additional RAM.

- The actual network I/O is throttled in proportion to the number of OCPUs being allocated. For these VM StandardE4.Flex shapes, you’re getting 1Gb per second of network throughput per OCPU. If you want to increase that, again, you’ve got to allocate more OCPUs, and this in turn means that you’ll need additional Oracle licensing.

One other important consideration to note with OCI is network bandwidth. You’ll be connecting to storage over iSCSI, meaning that your disk activity as well as your traditional network loads will all be riding on that 1Gb per second per OCPU.

That’s the StandardE4.Flex in a nutshell, and if I can, I try to avoid using it for a database. What I go with is the Optimized3.Flex. Why? First of all, this is running at a faster clock speed: 3.0GHz as opposed to 2.55GHz with the E4. More importantly, though, the Optimized3.Flex gives me 4Gb per second per OCPU, as opposed to the 1Gb per second I was getting with E4: a much better performance characteristic. The only downside here is that it’s limited to 256GB of RAM. If you need more than this, you’re either going to have to go BareMetal or go with the StandardE4.

Again, I stress that it’s very important to understand what you’re optimizing for. Does it make more sense to have more network bandwidth available and have a smaller SGA, or would you prefer to sacrifice some network bandwidth but have a much larger SGA available to you (all the way up to 1TB)?
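One way to frame that trade-off is to work out how many OCPUs each shape forces you to allocate for a given RAM and bandwidth target. The sketch below uses the per-OCPU figures quoted in this article. The 64GB-per-OCPU ceiling for Optimized3.Flex is an assumption (the article only states that ratio for E4), and the names `SHAPES` and `ocpus_needed` are hypothetical.

```python
import math

# Figures quoted in this article. The 64GB-per-OCPU ceiling for Optimized3.Flex
# is an assumption; the article only states that ratio for E4.
SHAPES = {
    "StandardE4.Flex": {"ram_gb_per_ocpu": 64, "gbps_per_ocpu": 1, "max_ram_gb": 1024},
    "Optimized3.Flex": {"ram_gb_per_ocpu": 64, "gbps_per_ocpu": 4, "max_ram_gb": 256},
}


def ocpus_needed(shape, ram_gb, network_gbps):
    """Smallest OCPU count that satisfies both the RAM and bandwidth targets.

    Returns None when the shape cannot reach the requested RAM at all.
    """
    spec = SHAPES[shape]
    if ram_gb > spec["max_ram_gb"]:
        return None
    for_ram = math.ceil(ram_gb / spec["ram_gb_per_ocpu"])
    for_network = math.ceil(network_gbps / spec["gbps_per_ocpu"])
    return max(1, for_ram, for_network)


for shape in SHAPES:
    print(shape, ocpus_needed(shape, ram_gb=256, network_gbps=16))
```

For a 256GB, 16Gb-per-second target, the bandwidth requirement is what drives the E4 OCPU count, while the Optimized3 count is set by RAM and bandwidth equally. Every extra OCPU here is an extra OCPU to license.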

For customers requiring less than 256GB of RAM, VMOptimized3.Flex is my go-to shape. It offers options up to 256GB, and I love the fact that I can grow this in single OCPU increments. With a lot of clouds (AWS and Azure, for instance), if I want to scale the processors up, my only choice is to double my number of vCPUs. Let’s say I truly do have a CPU bottleneck, I’m already running with 8 vCPUs, and I need to go just a little above that limit: on those clouds, I’m jumping straight to 16. The fact that Oracle can do this in single-processor increments is really cool, but only if your bottleneck really is CPU. If it’s RAM or disk I/O, then you really need to dig into the numbers and understand what’s going on under the surface.

The biggest drawback with this scenario is that I may have to buy more processor licensing even though my CPUs are not being fully utilized. The reason for this is that the network bandwidth and the total amount of RAM I can get are tied back to those OCPU allocations.

Let’s dig a little deeper into this with some examples of possible system configurations.

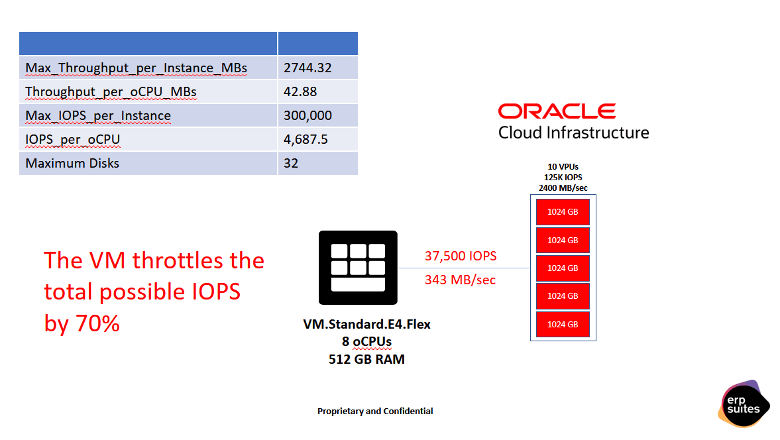

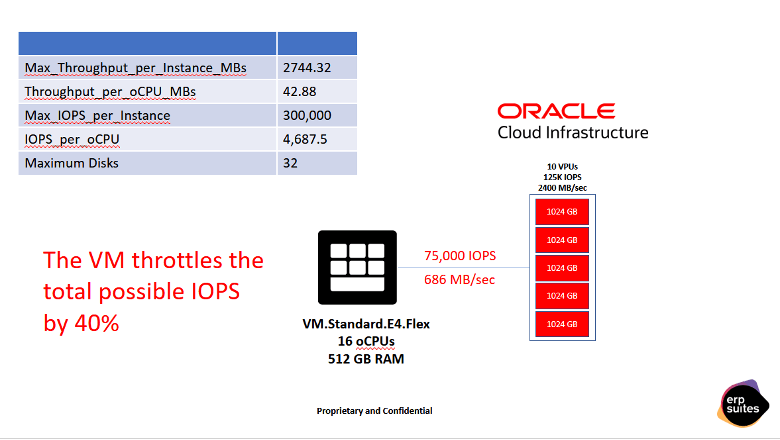

First, let’s look at using StandardE4.Flex, where 1Gb per second per OCPU is allocated. In this scenario, we’ll be using 8 OCPUs, and we’ve architected the storage so that it’s able to deliver 125K IOPS. This sounds great at first, but because 70% of the total IOPS are being bottlenecked by throttling on the OCPUs, we’re only able to take advantage of 37,500 IOPS. What we have to do to fix this is increase the number of OCPUs. So, we scale up from 8 OCPUs to 16 OCPUs. Now, we’re up to 75K IOPS. We could scale up the OCPUs further and increase that throughput, but every time we scale up we also have to purchase another Oracle license. Now, the reason we went for the E4.Flex shape here is because we need 512GB of RAM. If this were at or below 256GB, it would be a very different calculus because the Optimized3.Flex would be giving us 4Gb per second per OCPU allocated.
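The throttling in this example can be sketched as a simple cap. The per-OCPU IOPS figure below is not a published OCI limit; it is just what the numbers in this example imply (37,500 IOPS across 8 OCPUs), and the function names are hypothetical.

```python
import math

# Implied by the example above: 37,500 usable IOPS across 8 OCPUs.
IOPS_PER_OCPU = 37_500 / 8


def usable_iops(storage_iops, ocpus):
    """IOPS you can actually consume: the lower of storage and OCPU throttle."""
    return min(storage_iops, ocpus * IOPS_PER_OCPU)


def ocpus_to_saturate(storage_iops):
    """OCPUs needed before the storage, not the throttle, becomes the limit."""
    return math.ceil(storage_iops / IOPS_PER_OCPU)


for ocpus in (8, 16):
    print(ocpus, "OCPUs ->", f"{usable_iops(125_000, ocpus):,.0f} IOPS")
print("OCPUs to use all 125K IOPS:", ocpus_to_saturate(125_000))
```

Under these assumptions, it takes far more OCPUs than the workload needs for compute before the full 125K IOPS becomes reachable, which is exactly the licensing trap described above.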

AWS

On AWS, whenever we’re talking about Oracle databases and Oracle database licensing, there’s one instance family you need to be aware of: the X2. This is an instance family that AWS specifically created to be optimized for Oracle workloads. Within the X2 family, there are two different subsets: X2iezn and X2iedn. With the X2iezn, you get faster clock speeds; and with the X2iedn, you get more IOPS. Once again, we’re back to asking: What are you optimizing for?

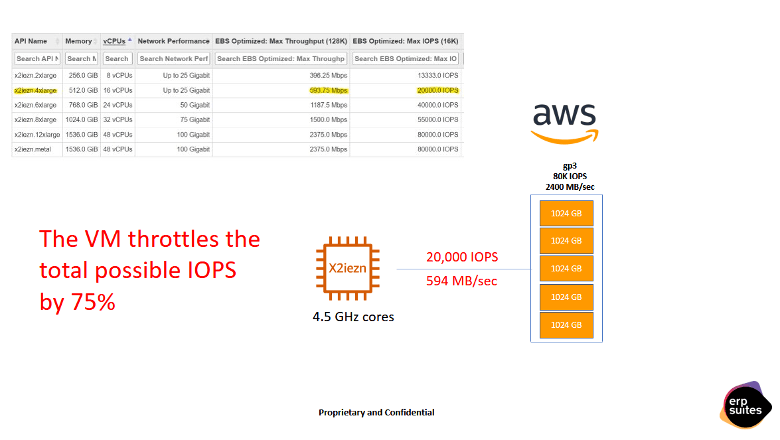

Let’s look at an example with the X2iezn, where clock speeds are running at 4.5GHz. These are among the fastest speeds you’re going to find on the cloud! In the scenario I’m depicting here, however, you’re going to end up with your IOPS getting throttled. I’m engineering the storage in such a way that I’m getting up to 80K IOPS, but because of the amount of EBS bandwidth I have available on this instance family, I’m only able to consume 20K IOPS. This is painful because there’s no such thing as too fast when it comes to disk.

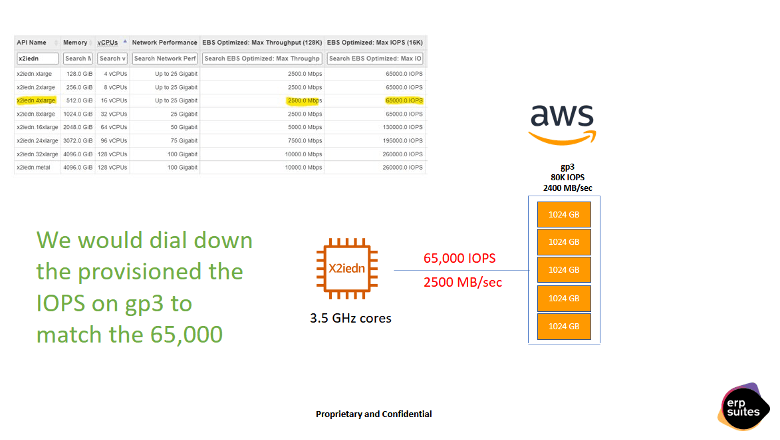

The second example features the X2iedn, which is running at slower clock speeds, so we’re going from 4.5GHz to 3.5GHz. Again, we’ve got the 80K IOPS here, but now we’re able to process fully 65K of that potential.

Bottom line: If we did a detailed analysis and found that CPU was our primary system bottleneck, I’d probably recommend going with the X2iezn. On the other hand, if we found out we were bottlenecking on IOPS, I’d recommend going with the X2iedn.
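As a rough illustration of that decision, the sketch below records the clock speed and usable IOPS from the two examples and picks the instance that is strongest on whichever bottleneck your analysis turned up. It uses the 4.5GHz and 3.5GHz figures from the second example; the names `INSTANCES` and `pick_instance` are hypothetical, and the IOPS numbers apply only to this 80K IOPS scenario.

```python
STORAGE_IOPS = 80_000  # what the storage layer was engineered to deliver

# Clock speed and usable IOPS per instance, as quoted in the two examples.
INSTANCES = {
    "X2iezn": {"clock_ghz": 4.5, "usable_iops": 20_000},
    "X2iedn": {"clock_ghz": 3.5, "usable_iops": 65_000},
}


def pick_instance(bottleneck):
    """Return the instance that is strongest on the measured bottleneck."""
    metric = {"cpu": "clock_ghz", "iops": "usable_iops"}[bottleneck]
    return max(INSTANCES, key=lambda name: INSTANCES[name][metric])


for name, spec in INSTANCES.items():
    share = spec["usable_iops"] / STORAGE_IOPS
    print(f"{name}: {spec['clock_ghz']}GHz, {share:.0%} of storage IOPS usable")

print("CPU-bound  ->", pick_instance("cpu"))
print("IOPS-bound ->", pick_instance("iops"))
```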

DBaaS Options

So far, we’ve been talking about provisioning VMs and the best way to go about doing that. Now, moving on to the database front, AWS and OCI both have DBaaS options available for us. On OCI it’s called Database Cloud Service, and on AWS it’s called Relational Database Service (RDS).

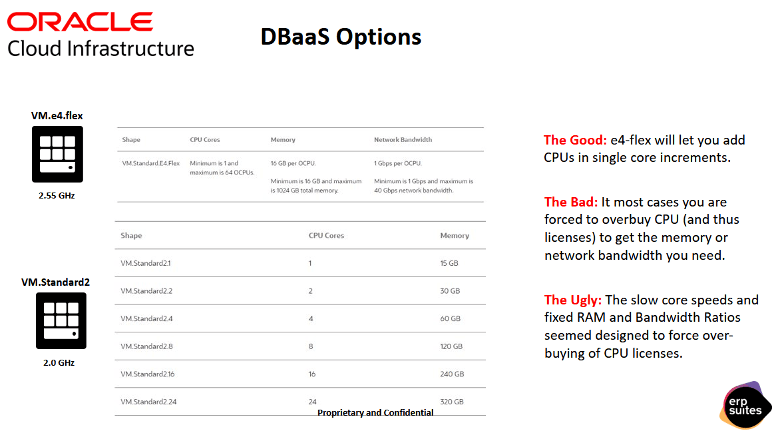

OCI DBaaS

With OCI, the main thing I want to emphasize is the fixed ratio between RAM and OCPU (this will become a recurring theme with the Oracle solution). Anytime you want to allocate more memory, you must allocate more CPUs; and when you add more CPUs, this increases the license cost. So, what about the E4.Flex shape we were looking at before, where it let us do everything independently? The good news is that it’s available on OCI’s Database Cloud Service (DBCS) option, but the bad news is that DBCS enforces a 1 OCPU to 16GB ratio, meaning that, as you need to scale up RAM and network I/O, you’re going to be paying for additional Oracle licensing even though you don’t need it.

The good news here is that the E4.Flex shape lets you scale in those single increments I discussed, and that’s really important if it turns out that CPU is your bottleneck. The bad news is that, in most cases, you’re going to end up overbuying CPU, and thus more Oracle licenses, just to get the memory and network bandwidth you need. You’ll end up seeing your CPUs sitting at 8-10% utilization. The ugly news here is that slow core speeds and fixed RAM and bandwidth ratios will force you into buying more Oracle processor licenses than your workload needs.
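To see how quickly that fixed ratio adds up, here is a small sketch that computes the OCPUs forced on you by a RAM target at 16GB per OCPU and compares them with what the workload needs for compute. The figure of 2 OCPUs for compute is a hypothetical input you would take from your own utilization analysis.

```python
import math

DBCS_RAM_GB_PER_OCPU = 16  # fixed ratio described above


def ocpus_forced_by_ram(ram_gb):
    """OCPUs you must allocate just to reach the RAM target."""
    return math.ceil(ram_gb / DBCS_RAM_GB_PER_OCPU)


def overbought_ocpus(ram_gb, ocpus_for_cpu):
    """OCPUs (and licenses) bought for memory rather than for compute."""
    return max(0, ocpus_forced_by_ram(ram_gb) - ocpus_for_cpu)


ram_target = 256
print("OCPUs forced by RAM:", ocpus_forced_by_ram(ram_target))
print("OCPUs bought only for memory:", overbought_ocpus(ram_target, ocpus_for_cpu=2))
```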

Oracle has another option called Exadata, where the options are completely flipped. It’s awesome. A quarter rack of Exadata will run you around $10,000 a month, so it’s not inexpensive, and you always have to have a minimum of 4 OCPUs (2 OCPUs per node), but the advantage here is that you can do per-second billing and you can scale CPUs up and down as needed. You’re getting that entire 768GB of RAM immediately, regardless of the number of OCPUs, and you’re literally pushing millions of IOPS. If you’re on the higher end of things and you’re wanting to look at OCI, Exadata is a phenomenal option. On the lower end with the VM side, just be aware of those OCPU-to-RAM ratios, as they can force you into buying more processor licensing than you really need.

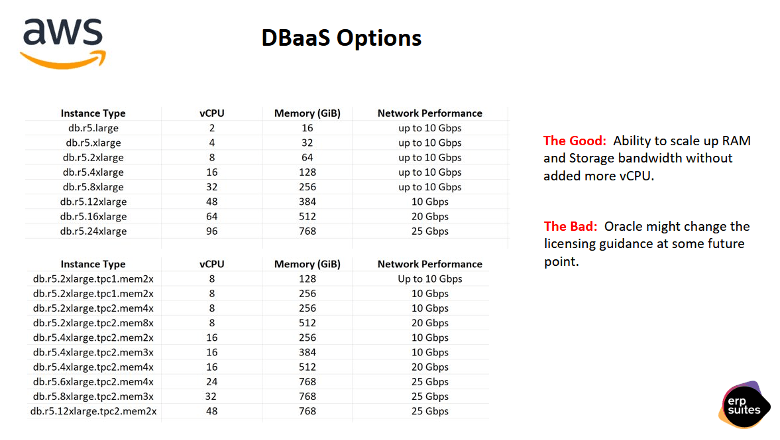

AWS DBaaS

With AWS, you have the db.r5 family, and you can see how the vCPU amounts are scaling, from 2 to 96 (and remember every 2 vCPUs requires an Oracle license). In this example, I’m back to the same problem I was having with OCI, where I’ve got 64GB of RAM, so I’m running on the r5.2xlarge instance. I’ve got very low CPU utilization, and I really need more RAM, so when I go to 128GB of RAM, I’m forced into a situation where I’m doubling the number of CPU licenses that I need.

AWS recently came out with a new option, which is .memx. Let’s take the example of the r5.2xlarge again here (the exact same number of CPUs we were looking at before), but now the memory doubles from 64GB to 128GB, and the multiplier goes all the way up to 8x. So, you can literally keep the number of CPUs the same and get all the way up to 512GB of RAM. These are some awesome options! Unfortunately, you can’t get them on the EC2 side. You can only get this extra memory option using RDS. Generally, I’m not a fan of RDS and prefer doing this directly on EC2; however, the economic impact of this is enough to make me change my mind.
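The licensing difference between the two paths can be sketched as follows. This assumes the rule of thumb above (one Oracle license per 2 vCPUs), starts from the r5.2xlarge (8 vCPUs, 64GB), and simplifies standard sizing to "vCPUs and RAM double together." The intermediate 4x multiplier is an assumption, since only the doubling and the 8x ceiling are mentioned here, and the function names are hypothetical.

```python
import math

VCPUS_PER_LICENSE = 2  # rule of thumb used in this article for AWS
BASE_VCPUS = 8         # r5.2xlarge
BASE_RAM_GB = 64       # r5.2xlarge


def licenses(vcpus):
    return math.ceil(vcpus / VCPUS_PER_LICENSE)


def standard_scale_up(target_ram_gb):
    """Standard sizing (simplified): RAM and vCPUs double together."""
    vcpus, ram = BASE_VCPUS, BASE_RAM_GB
    while ram < target_ram_gb:
        vcpus, ram = vcpus * 2, ram * 2
    return vcpus, ram


def extra_memory_scale_up(target_ram_gb, max_multiplier=8):
    """Extra-memory sizing: RAM grows by a multiplier, vCPUs stay fixed."""
    if target_ram_gb > BASE_RAM_GB * max_multiplier:
        raise ValueError("target exceeds the largest memory multiplier")
    multiplier = 1
    while BASE_RAM_GB * multiplier < target_ram_gb:
        multiplier *= 2  # 2x, 4x (assumed), 8x
    return BASE_VCPUS, BASE_RAM_GB * multiplier


for target in (128, 512):
    std_vcpus, _ = standard_scale_up(target)
    mem_vcpus, _ = extra_memory_scale_up(target)
    print(f"{target}GB: standard {licenses(std_vcpus)} licenses, "
          f"extra-memory {licenses(mem_vcpus)} licenses")
```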

Bottom Line: The ability to scale up RAM and storage bandwidth without adding more vCPUs is amazing, but Oracle might change the licensing guidance at some point in the future. For the moment, this is a great option, but it may not be around forever.

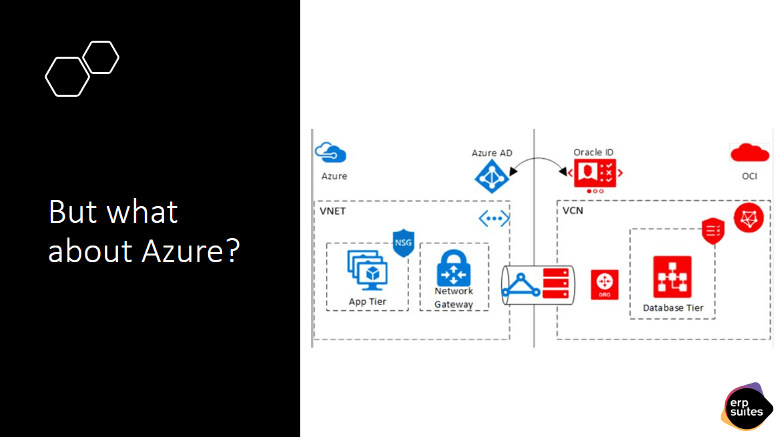

What About Azure?

With Azure, you can absolutely provision a VM and install Oracle on it, although the clock speeds will not be as fast and you won’t have as many IOPS as with either OCI or AWS. Azure’s strategy has been to partner with OCI directly in regions where they both have systems physically present in the same building (literally with a cable thrown up over the wall between the two). With this configuration, you’re able to ride over that local bandwidth and provision a database at OCI while your application tier is running at Azure. That said, unless you’re running a modern application and you’ve got all kinds of resiliency you can build into it, I wouldn’t do this. Most of the time, when we’re talking Oracle databases, we’re talking traditional Enterprise applications, and those are known for hard dependencies. So, in this scenario, you’re basically doubling the chances of something going wrong and affecting you, as an outage could take place on either side (OCI or Azure).

Bottom Line: If you’re an Azure shop and you need to go with Azure, I would not recommend this option. I would recommend just provisioning the server in Azure. Find the highest clock speeds you can and whatever is going to give you the most IOPS, and go with that. As always, the caveat here is to make sure you understand any potential licensing implications.

Related Issues and Common Questions

Why Prefer EC2 to RDS?

Previously, I mentioned that I prefer EC2 to RDS, and that preference really boils down to backend I/O. On RDS, the memory options are phenomenal, but all of RDS is running on an older tier of AWS storage. So, instead of using gp3 and io2, you’re actually using gp2 and io1. For gp2, which is the lower cost storage, I’m going to get 3 IOPS per 1GB allocated, whereas, with gp3, I get 3K IOPS right off the bat per volume that’s allocated. I can also add an additional 12K of provisioned IOPS. Still, in my opinion, memory covers a multitude of sins, and you’re not going to find a better CPU-to-RAM ratio than those RDS memory options anywhere on public cloud today.
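Here is a minimal sketch of that storage comparison, using only the figures quoted above (3 IOPS per GB on gp2; a 3K baseline plus up to 12K of provisioned IOPS on gp3). It deliberately ignores any per-volume floors, ceilings, or burst behavior, so treat it as a simplification rather than a sizing tool.

```python
import math

GP2_IOPS_PER_GB = 3
GP3_BASELINE_IOPS = 3_000
GP3_MAX_EXTRA_IOPS = 12_000  # additional provisioned IOPS quoted above


def gp2_iops(size_gb):
    """gp2 IOPS scale with allocated capacity."""
    return size_gb * GP2_IOPS_PER_GB


def gp3_iops(extra_provisioned=0):
    """gp3 IOPS are independent of capacity: baseline plus provisioned."""
    return GP3_BASELINE_IOPS + min(extra_provisioned, GP3_MAX_EXTRA_IOPS)


def gp2_size_to_match(target_iops):
    """GB of gp2 you would have to allocate just to reach an IOPS target."""
    return math.ceil(target_iops / GP2_IOPS_PER_GB)


print("500GB gp2 volume:", gp2_iops(500), "IOPS")
print("Any gp3 volume:  ", gp3_iops(), "IOPS")
print("gp2 GB needed to match the gp3 baseline:", gp2_size_to_match(gp3_iops()))
```

In other words, on gp2 you end up buying capacity to get IOPS, much as you end up buying OCPUs to get RAM on the OCI side.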

Which Family is Best in Azure?

Look for an instance family with massive amounts of NVMe storage. You can also get a close approximation to this by provisioning two servers, side by side, and setting up negative affinity (anti-affinity) between them. You’ll also want to make sure you’ve got them spaced out as much as you can within the same availability zone. Then you put the database on NVMe and use Maximum Availability Architecture to configure Data Guard so that, whenever something is written to one server, it’s guaranteed to be written to the other. This way, you can approximate some of that performance at a potentially much lower cost footprint, depending on your specific scenarios.

If you’re an Azure shop, another possibility you’ll want to consider here is whether you’re in a region that’s already paired with OCI and offers that Oracle/Exadata CS option mentioned previously. I know I said this was a horrible idea, and maybe it still is, but I would look at it anyway because of that per-second billing they do on the OCPUs, as well as the ability to dynamically scale things up and down. When you figure up the total cost of all of your Oracle licenses, plus the infrastructure cost on the Azure side, versus doing the same thing with Exadata on the OCI side, you might find that the Exadata option is a lot cheaper. I also recommend you do some analysis around your database and see if it could benefit from advanced compression because, like NVMe, it’s something else that helps to mitigate the performance difference you’re going to get between the two.

With Azure, Which CPU Shape Should We Choose?

My general go-to with Azure Oracle databases is the Standard148sv2 with 12 vCPUs and 252GB of RAM.

What About Exadata to Azure?

At the risk of sounding like a broken record, you have to really understand your workloads and what type of bottlenecks you have. Depending on what version of Exadata you’re running on, it’s using either InfiniBand or RoCE (RDMA over Converged Ethernet) interconnects between the database nodes and the storage nodes. So, instead of everything happening in milliseconds, it’s happening in microseconds. Over those InfiniBand or RoCE interconnects, the boxes literally have the ability to access memory on the other box because everything is happening so fast. Because of this, you’re able to drive millions and millions of IOPS off of the Exadata.

You have to understand how many IOPS you’re using. Typically, I would do a detailed study and then ask: What is my average amount of IOPS? What are the peaks? What are the high-water marks? What is driving those high-water marks? A lot of times, you’ll see a spike where, all of a sudden, you’re using 200K IOPS; and then, once you dig into it, you’ll find that it’s your RMAN backups, or you’re doing something with archive backups. In the cloud, there are other ways to back things up, so those peaks might not matter much for you; but understand what your average IOPS requirement is, and understand what those peaks look like, so you can explain those peaks.
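A study like that boils down to a few summary numbers and an explanation for each spike. The sketch below shows one way to do it in Python. The sample data is entirely hypothetical (in practice you would pull it from your own monitoring or AWR reports), and the function names are illustrative.

```python
# Hypothetical samples: (time, IOPS, dominant activity at that time).
samples = [
    ("09:00", 18_000, "oltp"),
    ("12:00", 22_000, "oltp"),
    ("15:00", 20_000, "oltp"),
    ("18:00", 16_000, "oltp"),
    ("02:00", 200_000, "rman_backup"),
]


def summarize_iops(samples, exclude=()):
    """Average and peak IOPS, optionally ignoring some activities."""
    values = [iops for _, iops, activity in samples if activity not in exclude]
    return {"average": sum(values) / len(values), "peak": max(values)}


def peak_drivers(samples, threshold):
    """Activities responsible for samples at or above the threshold."""
    return sorted({activity for _, iops, activity in samples if iops >= threshold})


print("All activity:     ", summarize_iops(samples))
print("Spikes driven by: ", peak_drivers(samples, threshold=100_000))
print("Excluding backups:", summarize_iops(samples, exclude={"rman_backup"}))
```

If the backups can be handled another way in the cloud, the second summary is the one to size against.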

Advanced Optimization Techniques for Licensing

Finally, let’s go back to the scenario we discussed where we’ve got the box with 16 vCPUs, and the only reason we’ve got that many vCPUs is because we need 256GB of RAM. If we do the math, we can see exactly how this is netting out. Even assuming we got a 50% discount on the Oracle list price, the numbers just look ugly. One thing you can do to make those numbers look better, however (assuming you really can get by with fewer CPUs), is to use advanced compression. For JD Edwards specifically, if you use the advanced compression feature, you’re going to get anywhere from 60-70% compression, and the really cool thing is that the data is not just compressed on disk; it’s also compressed in RAM. As a result, you’re going to be fitting a lot more data into the same SGA because the data is so much denser. Admittedly, you’re going to run your CPUs marginally hotter because of that, but you’re also going to cut the amount of RAM you need in half; and in this particular case, you’ll also be able to cut the amount of CPU in half. This can be a very powerful way of reducing your overall costs, as the Oracle Enterprise Edition features you need to run on are four times less expensive.
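To show the shape of that math, here is a hedged sketch. The prices are hypothetical placeholders, not Oracle list prices (check the current Oracle price list for real figures); the compression option is assumed to cost a quarter of the base license purely for illustration. The 2-vCPUs-per-license rule and the 50% discount come from the discussion above.

```python
import math

VCPUS_PER_LICENSE = 2

# Hypothetical placeholder prices per processor license (NOT Oracle list prices).
BASE_LICENSE_PRICE = 40_000
COMPRESSION_OPTION_PRICE = 10_000  # assumed to be a quarter of the base price


def license_cost(vcpus, discount, with_compression=False):
    """Discounted license cost for a given vCPU count."""
    count = math.ceil(vcpus / VCPUS_PER_LICENSE)
    per_license = BASE_LICENSE_PRICE
    if with_compression:
        per_license += COMPRESSION_OPTION_PRICE
    return count * per_license * (1 - discount)


before = license_cost(16, discount=0.5)                        # sized for 256GB of RAM
after = license_cost(8, discount=0.5, with_compression=True)   # half the RAM and CPU

print(f"Without compression: {before:,.0f}")
print(f"With compression:    {after:,.0f}")
print(f"Difference:          {before - after:,.0f}")
```

With these placeholder numbers, paying for the compression option on half as many licenses still comes out well ahead. Your own result depends entirely on the real prices and on whether the workload truly fits in half the footprint.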

Another cool option here is Oracle KVM. What you would do in this case is to stand up a BareMetal box and use Oracle KVM as your hypervisor. Oracle KVM supports something called hard partitioning, and Oracle has published guidance on how it complies with Oracle licensing restrictions. By way of example here, you could go out to AWS, get an X2iedn or X2iezn (don’t just get a VM off of it; get the entire box), and then use Oracle KVM. Say you only want four processors as part of your VM, and you want 2TB of RAM, and that’s going to be your VM. Well, you’re going to get all the RAM, all the EBS bandwidth, and you’re only going to pay for the Oracle licensing that you actually need. That’s a technique you can use on AWS, Azure, or OCI. This is where you’re just bringing your own hypervisor, and this is the real power move in terms of optimizing it because you’ve got the ability to literally say, “This is how much I need, but give me all the rest of the hardware capacity of the box, network I/O, disk I/O, RAM, etc.”

Free Assessment

Migrating Oracle to the cloud can be a really high-risk move if you only look at CPU counts. With Oracle requiring twice as many licenses to run a workload on AWS and Azure, it's easy for businesses to spend more on licensing. You can schedule a free assessment where our cloud experts compare and contrast the performance characteristics of each cloud with a fair representation to identify the best solution for you.

ERP Suites Executive Director, Paul Shearer, has earned a reputation for deep technical knowledge, creativity, and vision. Paul is a familiar face on the JD Edwards conference circuit, often sharing the stage with Oracle to untangle the myths and complexities of cloud migration.
