Hybrid vs. Cloud: Where Should JD Edwards and Your AI Actually Live?

December 1st, 2025

28 min read


This podcast episode explores the growing strategic decision JD Edwards customers face: whether to keep JDE on-premise while connecting to cloud AI services, or to move the entire ecosystem into a full cloud environment. Through a detailed discussion of security, compliance, performance, cost, scalability, and required internal resources, the conversation breaks down the practical trade-offs of both architectures. Listeners gain clarity on when a hybrid model makes sense for quick wins or regulatory constraints, and when cloud-native becomes the better long-term platform for scalability, faster AI adoption, access to modern tools, and integrated data strategies. The episode concludes with guidance for aligning technology choices with organizational goals, risk tolerance, and innovation readiness.

Table of Contents

- Introduction

- Security & Compliance: Hybrid vs Full Cloud

- Securing Cloud APIs & Compliance-Driven On-Prem Decisions

- Performance, Latency, and Measuring Success

- Scalability, Elasticity, and Cloud Advantage

- Cost Considerations, ROI, and When Hybrid Gets Expensive

- AI Adoption Speed, Resources, and Choosing a Model

- Closing

Transcript

Introduction

Is your JD Edwards system still on-prem, but you're relying on cloud AI to extend its capabilities? Or are you considering, or maybe already running, a full cloud deployment where JDE, your data, and your AI models all live together? In this episode, we'll uncover the real-world trade-offs between hybrid and full-cloud JDE-plus-AI architectures: what they mean for security, performance, cost, and even innovation. You'll walk away with a clearer understanding of which model fits your business and how to chart a practical path forward.

Welcome to Not Your Grandpa's JD Edwards, the podcast where ERP stability meets enterprise innovation. I'm your host, Nate Bushfield, and today we're tackling a strategic decision that's increasingly urgent for IT leaders and JDE customers: where should your JDE platform live, and where should your AI live with it? So you have two options. You keep JD Edwards on-prem and connect to an external cloud AI service, or you move everything, JDE, data, and AI, to the cloud together. Each choice affects security, performance, cost, staffing, and even your pace of innovation.

With me is Drew Robb, an AI advisor, a longtime JDE expert, and a longtime guest of this podcast who's guided clients through both paths. He'll help us break this down segment by segment so you can make an informed decision and walk away with a road map that fits. Drew, how are you doing today?

I'm doing fantastic, Nate. Thanks for asking. I'm super excited for this topic today because we do have a lot of customers who talk about staying on-prem, like you said, but then using the AI cloud services out there, or just moving everything to the cloud, which is a bigger investment. We'll talk more about that later. But we do have customers in both realms. So it's really important to understand the limitations of each side, why one side may be better than the other, and how you can take either route when implementing an AI solution in your business. So thank you, Nate. Happy to be here and happy to talk about this great topic.

Yeah, I'm very excited about this topic. So let's get this rolling.

Have you invested in a JD Edwards upgrade but aren't seeing the ROI you expected? Could a few overlooked factors be quietly sabotaging your investment? In this episode, we break down the top reasons companies fail to realize the full return from their JDE upgrade. Stick around, and you'll learn how to avoid these mistakes and turn your upgrade into a strategic advantage.

Security & Compliance: Hybrid vs Full Cloud

Yeah. So what you're saying is that when it comes to network data protection, and maybe identity and access management, the cloud model is the better fit. Am I right with that?

Yeah, I would agree with that.

And then vice versa: when it comes to control, simplicity, and even the compliance and governance side, maybe an on-prem model is a little bit easier to maintain for a lot of these companies that are out there. Am I right?

Yeah, I would say that's a good way to put it. It's really about clients who say, I need to keep a lot of this inside my four walls. I don't want data getting out. I don't want to re-architect the entire security model, with role-based security and all that. That's really when it comes into play. You already have your compliance and audit in place, and traceability becomes a lot easier as well.

So yeah, I completely agree. That's exactly what I'm getting at around security.

Yeah, of course. So what's really involved in securing cloud APIs from on-prem systems?

Yeah, that's a fantastic question. It's really around strong authentication and access control, right? We mentioned JWT and OAuth authentication, even single sign-on. Being able to authenticate with these pieces, along with that role-based security, is very important. And so is being able to use a secure connection, right? I talked about a VPN earlier, and that's a very secure connection. Being able to use the VPN to access the AI services you want to utilize when you keep your data on-prem is very important.

And again, I mentioned it before, it's the encryption as well. Data has to be encrypted in transit and also at rest. So being able to encrypt the data you send out to the cloud-based models and the AI services out there is also very important.

And I'd say that's the biggest thing: that really secure connection out to these solutions.
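As a rough illustration of the two requirements named here, authentication and encryption in transit, the following Python sketch builds (but does not send) a request to a cloud AI API. The endpoint URL, the token value, and the helper name `build_ai_request` are all hypothetical; a real deployment would use the provider's documented endpoint and a token issued by its OAuth or JWT flow, typically over the VPN described above.

```python
import json
import urllib.request
from urllib.parse import urlparse

# Hypothetical endpoint; replace with your provider's documented URL.
AI_ENDPOINT = "https://ai.example.com/v1/chat"


def build_ai_request(url: str, token: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON request to a cloud AI API."""
    if urlparse(url).scheme != "https":
        # Refuse plain HTTP so the payload is always encrypted in transit (TLS).
        raise ValueError("cloud AI endpoints must be called over HTTPS")
    if not token:
        raise ValueError("a bearer token (for example a JWT from an OAuth flow) is required")
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# Demo values only; nothing is sent over the network here.
req = build_ai_request(AI_ENDPOINT, "example-token", {"prompt": "Summarize open invoices"})
print(req.get_method(), req.full_url)
```

The sketch only covers the client side of the call. Encryption at rest and the VPN or private link itself are infrastructure settings that live outside application code.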

Yeah, and that's obviously something you'll have to plan for. It's something that really will come up, especially when it comes to your data, because obviously you don't want anyone looking at it who shouldn't be.

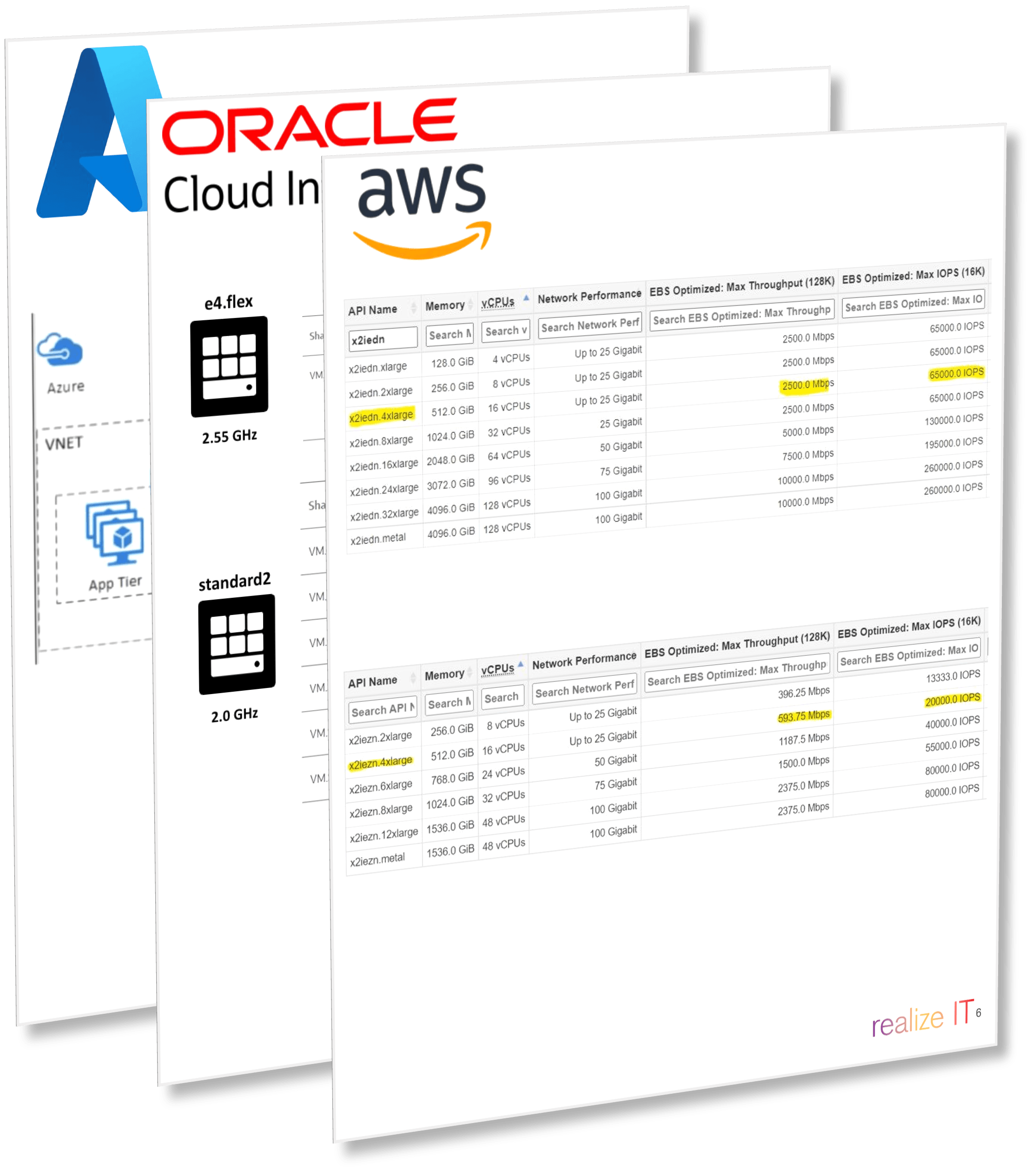

Are cloud platforms like OCI and Azure compliant by default, or is oversight still needed with these?

Oh, that's a fantastic question. Specifically talking about the cloud model: they are compliant by design, right? They offer a certified infrastructure that's built on strong security. You will still have to oversee configuring what I mentioned before, the identity and access management, so the security and user policies. Certain users only have access to certain models or certain services, or only to certain databases. It even covers who has access to the data, and end-user authentication, such as for a digital assistant.

That's really the biggest thing. The platforms are compliant. It's all around the identity and access management that you have in your company.
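The idea that certain users only reach certain models, services, or databases can be sketched as a small role-based check. This is a minimal Python illustration, not any cloud provider's IAM API; the role names, resource names, and the `is_allowed` helper are hypothetical.

```python
# Hypothetical roles and resources, for illustration only.
POLICIES = {
    "finance_analyst": {"finance_chatbot", "gl_database"},
    "ap_clerk": {"document_understanding"},
    "ai_admin": {"finance_chatbot", "document_understanding", "gl_database"},
}


def is_allowed(roles: list[str], resource: str) -> bool:
    """Return True if any of the user's roles grants access to the resource.

    Unknown roles grant nothing, so access is denied by default.
    """
    return any(resource in POLICIES.get(role, set()) for role in roles)


print(is_allowed(["ap_clerk"], "document_understanding"))  # True
print(is_allowed(["ap_clerk"], "gl_database"))             # False
```

In a real cloud tenancy these rules would be expressed in the platform's own policy language. The point of the sketch is only that the platform being compliant does not decide who gets access to what; that mapping is still yours to define.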

Yeah, and still sticking with the compliance side: why, or even when, should companies favor an on-prem solution for these compliance reasons?

Yeah, this is a great question too, Nate.

I'm on a roll with some great questions.

Oh, it's so good, yeah. It's really around what I mentioned before: the companies that want to keep everything within their four walls, when they have really sensitive data, whether that be financial or healthcare. They just can't, in their right mind, move out to the cloud or do any sort of migration like that.

When a company really needs to control everything for its requirements, it's important that we really think about the on-prem solution. And they don't really recognize specific cloud providers for various services as well. As I said, a lot of cloud providers are up to speed with these certifications, but it really comes down to this: do you have a good backbone in security that you don't want to risk losing by re-architecting all of it in the cloud?

So that's very important. And I think that's why companies really say, we need to plan on on-prem for those compliance reasons.

Yeah, there's a lot of sensitive data out there, and it's all use-case based. If you're utilizing, like you mentioned, healthcare data, or that side of the world where you'd be giving away sensitive data, you're not going to want to just put that out in the cloud in any way, shape, or form.

Absolutely.

Securing Cloud APIs & Compliance-Driven On-Prem Decisions

Well, yeah. But to move on to the next topic: what are the performance implications of hybrid versus cloud-native AI when working with JD Edwards?

Yeah, and I think we mentioned a little bit about latency before. Data must travel from those on-prem services through a secure VPN to those cloud services. A lot of companies have started to use private containers to access those AI services, and that helps with security as well. I failed to mention that before, so I do apologize.

It's really about having that secure connection. But traveling back and forth just makes the latency a lot longer than with the cloud. In the cloud, as I said, you're on a secure private network. It's really fast data exchange when you're all in the same VPN or VPC, the same cloud infrastructure, same tenancy, same place. It's really easy to connect to different sources, and data just moves a lot quicker that way.

And then you've really got to look at integration as well, right? With hybrid, you're required to have API gateways for secure data connections, whether that be to a third-party database, your JD Edwards ERP system, Salesforce, or other ERP systems. Those gateways, again, are a little bit more complex to build out.

When your data's in the cloud, you can have direct integrations between JDE and the AI services out there, or other databases that already live in your cloud. So it's really the connections and the integrations that could also slow things down. If you really want real-time modeling or real-time AI solutions, latency becomes a big thing.

And that's where the cloud can give you real-time streaming of data, whereas on-prem is definitely going to hinder that. You may get a refresh every 10 or 15 minutes, and it's not conducive for a user to wait that long for a response from a solution.
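One way to turn the latency comparison into numbers is to time the same call along each path and compare the median and the tail. The Python sketch below is a minimal, assumption-laden example: `measure_latency_ms` is a hypothetical helper, and `fake_hybrid_call` is a stand-in that sleeps for 5 ms instead of making a real request through a VPN.

```python
import math
import statistics
import time


def measure_latency_ms(call, runs: int = 20) -> dict:
    """Time `call` several times and summarize the latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank 95th percentile: the value at position ceil(0.95 * n).
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "median_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


def fake_hybrid_call() -> None:
    """Stand-in for an on-prem to cloud round trip (assumed 5 ms here)."""
    time.sleep(0.005)


stats = measure_latency_ms(fake_hybrid_call, runs=10)
print(stats)
```

Swapping the stand-in for a real request against each architecture would give a like-for-like comparison. The tail (p95) usually matters more than the median for an interactive assistant, since that is what the unluckiest users experience.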

Yeah. And the way you put it, it kind of seems like cloud is always better. But are there specific cases out there where maybe it's not better for some of these on-prem people when it comes to performance and latency?

Yeah, I would say, again, with latency it's kind of tough, because in the cloud everything's right there at your disposal and it's very close. But it's really important to understand that when you move everything to the cloud, and we can get into this a little bit later, the services become more readily available.

However, say you want to do something like POC testing, or move smaller workloads of data to the cloud and connect to those AI solutions. You already have the data intact and very clean. It's in your infrastructure, you have all the other services and infrastructure built around it, and you just want to play around with it.

You can migrate a small data set and then connect to those AI services for that specific use case. Maybe you just want to gain insights on your data or leverage a simple financial chatbot. That's really when staying on-prem is actually feasible. It's when you're starting your AI journey that the on-prem plus cloud, or hybrid, model makes the most sense.

Performance, Latency, and Measuring Success

Yeah. And how would you really measure success in performance here?

Yeah, and again, as I talked about earlier, it's really going to depend on the specific use case and the value you're trying to provide to your business. Are you using those large workloads and that real-time data that I talked about with the cloud approach? Are you trying to build out an AI solution, an AI platform, or really a road map that can take more than six months, maybe a year or two years?

Or are you trying to do the hybrid approach, where you just want a quick win or a simpler task, right? You're not ready to leverage all the capabilities of the cloud architecture, or to even take on the project of moving everything to the cloud, because you've got to think: moving to the cloud is a project in itself.

So it's really about getting started, getting those simple workloads figured out, and getting those simple process improvements from AI. That's the reason you'd choose a hybrid model over the cloud, right? Real-time, larger workloads and using a lot more services: that's the cloud. Quick-win solutions: that's hybrid. That's a good way to think about it.

And we're all about quick wins here at ERP Suites.

Yeah. All right. So we talked a lot about the security side and the performance side, but what about scalability and flexibility? Which architecture better supports long-term scalability, especially if AI adoption truly grows like we believe it will?

Yeah, that's a fantastic question, Nate. We'll start with the hybrid model first. If you're going to run these AI solutions and AI models out there, but you want to leverage maybe some hardware, GPUs, to run them in-house, that really gets expensive.

Let's just say that, right? Because you're not able to easily scale your infrastructure up or down, or change how you run these AI solutions or AI models.

Whereas with the cloud approach, it's very serverless, let's just call it that, right? You're able to scale up a large language model or solution you're using, or an instance you may be running against the model. You can scale up or scale down based on usage.

Say you're trying out a digital assistant as a service or a document understanding tool as a service, and it doesn't pan out to be the AI solution you want. Resources on demand is really the best way to talk about the cloud, right?

And it's basically end-to-end architecture as well. It really allows you to scale services up and down and gives you more compute to run AI models, solutions, and services. With on-prem plus cloud, or hybrid, again, you're very limited with that.

Yeah, but what does that truly look like in the cloud? What does elastic scaling in the cloud actually look like day-to-day?

Yeah, day-to-day it's really just about automating or adjusting the resources you have. It's specifically around CPU and memory and how much you need to run any AI solution.

If demand drops, let's say someone's not using a digital assistant as often and there aren't as many users or as many inputs, you're able to elastically scale that instance up or down to save cost, right?

It's basically on demand, based on how often you're using these solutions. And it's really helpful for understanding when users aren't using the resource. It helps you keep track of that too, right?

So you can keep track of peak times or peak seasons when users are using a specific service or resource, and when you can turn it off or turn it back down. Maybe you can lower the CPU, maybe you can lower the GPUs, based on usage.

So it allows you to be more flexible and to have better cost savings around the infrastructure as a whole. And you're not as pigeonholed with that.
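The day-to-day adjustment described here can be pictured as a simple proportional rule: compare observed utilization with a target and resize accordingly. The Python sketch below is one common way to express that idea, not how any particular cloud service implements it; the function name, the 60% target, and the instance limits are assumptions for illustration.

```python
def desired_instances(current: int, cpu_percent: int, target_percent: int = 60,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Proportional scaling rule: resize so utilization lands near the target.

    Integer percentages keep the arithmetic exact; the result is rounded up
    and then clamped to the allowed range.
    """
    if current < 1 or target_percent < 1:
        raise ValueError("current and target_percent must be at least 1")
    wanted = -(-(current * cpu_percent) // target_percent)  # ceiling division
    return max(min_instances, min(max_instances, wanted))


print(desired_instances(4, 90))  # peak usage: 6 instances
print(desired_instances(4, 15))  # quiet period: 1 instance
```

Managed cloud services typically apply a rule of this kind on your behalf, which is what makes lowering CPU or GPU capacity during quiet periods a configuration choice rather than a hardware purchase.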

Yeah. So what you're saying is that the cloud, with this flexibility and scalability, is a little bit better in terms of what you can actually do. If you need to scale up your business or scale it down, you're more flexible in the cloud than you are on-prem.

Makes a lot of sense. And even if we go back a step, when we're talking about latency and performance, it seems like the cloud might be the better choice there too, just depending on what you're actually utilizing it for.

Yes, if you want to utilize small amounts of data, in terms of those quick wins we were talking about, it doesn't really matter. If it's just little things, maybe you won't really notice it. But there's still that performance side if you're using larger data sets, or if you're utilizing AI in more than just one or two ways.

So the cloud is looking like the better option right now, but we're not even close to unpacking all of this.

Scalability, Elasticity, and Cloud Advantage

So what does it look like in terms of cost for both of these models, not just up front, but over time?

Yeah, absolutely. I would say, with the hybrid model, you do have the hardware up front for the costing, right? You have the maintenance, you have the hardware. If you're going to apply some more AI modeling to run against that hardware, you might have to buy more.

But in the long run, it's a much higher cost on-prem plus the cloud to implement AI services and agents in the current framework, right? Another thing to think about with cost: since you have this current security framework and you just want to utilize the AI models out there, the cost is definitely going to be around the people, and managing different people inside the company. Maybe it's your DBAs, who have to learn how to structure data or send data out to these AI models.

Or it's system administrators who have to understand how these different models securely connect. We've talked about, probably not on the podcast, the MCP protocol, so using that for agents talking to different agents. Basically, you need this intellectual property, and you have to pay for more people: data scientists as well, and solution architects to understand the different cloud services out there. That's a huge cost for being on-prem plus the cloud, and it's what you've really got to think about.

Now, in the cloud, what you really want to think about with cost is, again, what we mentioned with serverless. It's a lot of pay-as-you-go. And with the cloud, it's really easy to experiment between the different tools and technologies.

We talk serverless: turning down a digital assistant if you don't want it, turning down a document understanding tool if you don't want it. If you're not liking an LLM, turn it off and switch to another one. It really reduces that, you know, that ability really around the cloud. On the people side, you're really just spending on people who understand the cloud-based services.

So it's just a little bit different there: not as much support or security as on-prem. I failed to mention that. But with on-prem plus cloud, you still need people, like security, to understand how your infrastructure's security relates to connecting to a cloud provider. So that's also big.

But yeah, I think for the cost considerations, it's really around the specific points that I mentioned there.

Yeah. And don't get me wrong, I'm sure there are still people listening to this podcast right now saying, I'm already on-prem. We already spent that money to be on-prem. And that makes a lot of sense. Yeah, they already spent that up-front cost.

So maybe in the short term, at least, they won't really see that cost because they already accounted for it. It was X number of years ago. Whatever.

Yeah. Well, one thing I would mention with that, Nate, and that's a great point, is that the up-front cost would definitely be moving to the cloud, right?

They've already paid for everything, so they want to stay on-prem. Another thing to think about, and I'm not trying to get too far off topic here, is the movement of agentic AI. All these services and solutions are constantly changing.

So companies that are already on-prem might not feel that AI is at a spot where they can justify going to the cloud and actually leveraging all these different services and solutions out there. That's another reason to stay on-prem, right? You have the ability to do that quick win: try that one thing, and if it doesn't work, scale it back.

You still have your entire infrastructure intact, and your security and your data are all still there too.

So yeah, it's funny you bring up agentic AI, because actually that'll be the next podcast. It'll be released two weeks after this.

Awesome.

So yeah, we're going to dive a little bit deeper into that eventually, but back to what we're talking about right now. When does hybrid, and that's on-prem data with an AI agent or AI service in the cloud, become more expensive than maybe what somebody would expect?

Yeah. What you really want to think about with the hybrid model, and this is just hypothetical, is that you might have duplicate data, right? Let's say your data is on-prem, but you're having latency issues. So you want to move the data to the cloud to access the different servers and services out there, just as a test, right?

So you're kind of managing a double workload to lower that latency, because you have data on-prem in an ERP database and you also have data in the cloud. You've also really got to think about having to create these new connections. I talked about that earlier in this podcast: it's having those secure API calls and connections, and securing data in transit.

And say you have a large language model that you're using, maybe that's Claude, but you want to replace it with, I don't know, ChatGPT. I don't want to name names here, but you just want to replace it with a different model. You have to create a whole new connection to do that on the hybrid model, right?

So creating all those new connections securely, and making sure they're intact, could get a little tedious and cumbersome. And you've also got to think about when you create these servers, or connect out to cloud services and use them, and then all of a sudden you stop using them or the usage goes down.

We talked about the cloud model being able to scale up and scale down. Here, it's just sitting out there, right? You don't have the ability to autonomously scale those services up and down. So they may sit idle, and you might be wasting money on a solution you haven't been using, in a sense, right?

So that's another thing to really think about as well. But yeah, it really is all around duplicate data, creating all those new connections as you start to use different AI tools or services, large language models, RAG, digital assistants, all that. And then again, the idle workloads or idle services out there as well.
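The idle-service point lends itself to simple arithmetic. The Python sketch below compares a service that runs all month with one that is billed only for busy hours. Every number is hypothetical, and it assumes the same hourly rate in both cases, which real pricing may not honor.

```python
HOURS_PER_MONTH = 730  # rounded average number of hours in a month


def always_on_cost(hourly_rate: float) -> float:
    """Monthly cost of a service that keeps running whether or not it is used."""
    return hourly_rate * HOURS_PER_MONTH


def pay_as_you_go_cost(hourly_rate: float, busy_hours: float) -> float:
    """Monthly cost when the service is billed only for hours of real usage."""
    return hourly_rate * busy_hours


def idle_waste(hourly_rate: float, busy_hours: float) -> float:
    """Money spent on hours when the always-on service sat idle."""
    return always_on_cost(hourly_rate) - pay_as_you_go_cost(hourly_rate, busy_hours)


rate = 2.0        # hypothetical price per hour
busy_hours = 120  # hypothetical hours of real usage in the month

print(always_on_cost(rate))                  # 1460.0
print(pay_as_you_go_cost(rate, busy_hours))  # 240.0
print(idle_waste(rate, busy_hours))          # 1220.0
```

The gap shrinks as usage approaches round-the-clock, so the comparison is most useful for bursty workloads such as a digital assistant that is busy only during business hours.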

Cost Considerations, ROI, and When Hybrid Gets Expensive

Yeah. All right. So obviously we're breaking down a little bit more of the hybrid side, but what about the cloud? Is it ever too expensive to really justify?

Yeah, and we've harped on it before, on why a lot of companies still want to stay on-prem and create those secure connections. It's because of the up-front cost, right? Do companies or customers even have the budget for the move to the cloud?

Is it in their road map? Is it going to be one year, two years, three years down the road? But they're ready to invest in a small AI implementation now. That's when the cloud really becomes too expensive, because you don't want to move everything to the cloud. You just want that quick win, as we mentioned before. And another one is if you're continually moving data sets from different databases, right?

It's going to be tough to have everything in the cloud, especially all your different data sources. Some might live on-prem. Having the ability to connect to those different sources, and having the budget for everything to be in the cloud, could get very costly as well.

And really, it comes down to the fact that, as we talked about with on-prem, the security architecture and the data architecture are already there and you're already familiar with them. Every data architect and all the DBAs are familiar with it. You have to think about that alongside the up-front cost of moving to the cloud.

Re-architecting the data and re-architecting the security will seamlessly get easier, as we talked about earlier with identity and access management, and with having your data all in one place, like a data lake, which is a term we like to throw out as well. But the up-front cost of doing all that and recreating all that is where the cloud does get really expensive.

So it's really all about budget and timeline. Is it there? Is it not? Or are you just really looking to improve a single AI process? Then you want to stay in the hybrid model.
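The budget-and-timeline question can be framed as a payback period: how many months of savings it takes to cover the up-front migration cost. The Python sketch below is a deliberately simplified model with hypothetical figures; it ignores financing, staffing changes, and anything else a real business case would include.

```python
from typing import Optional


def payback_months(upfront_cost: int, monthly_saving: int) -> Optional[int]:
    """Months until cumulative savings cover the up-front migration cost.

    Returns None when there is no monthly saving, since the move never pays
    for itself on cost alone.
    """
    if monthly_saving <= 0:
        return None
    return -(-upfront_cost // monthly_saving)  # ceiling division


# Hypothetical figures for illustration only.
print(payback_months(120_000, 5_000))  # 24
print(payback_months(120_000, 0))      # None
```

If the result is longer than the road map allows, that is the situation described above where staying hybrid for a single AI process is the more defensible choice.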

Yeah. So, to maybe sum it all up a little bit: it might be cheaper if your infrastructure is already on-prem and already in place, though there might be a surprise cost from scaling AI, 100%. But if everything's already in place, maybe on-prem's the right way to go.

But for cloud, yes, there are higher up-front costs, but it's pay-as-you-go and there's lower maintenance. And yes, you might have long-term subscriptions and lock-in concerns. But after you make that move, you don't really want to go back.

And that's very true, especially because of all the reasons we've listed out here. Now, I will say, and this is something our CEO says all the time: cost is not the reason that you should move to the cloud. Yes, it can be expensive off the bat. Yes, for X, Y, and Z, maybe you want to stay on-prem a little bit longer. But cost should not be the main reason that you don't move, because there's so much you can do with the cloud, and there's a lot of potential and a lot of ROI opportunities that the cloud unlocks for a lot of these companies.

And yes, there are a lot of reasons to stay and we've laid them out so far.

But to get into a little bit more of maybe a reason to stay or go, which setup allows faster AI adoption and even integration with these new tools?

Yeah, that's a fantastic question, Nate. For faster AI adoption and integration with these tools, absolutely, it has to be, you know, with the hybrid model, right? And really, when you start to catch up, there are connectors. I think I mentioned it before: a lot of companies out there are noticing that a lot of customers want to stay on-prem, right?

They have a lot of their data on-prem but want to connect to outside sources. It's really about containerizing those AI services in one specific bucket, so we can securely access them via what I said before: authentication pieces, secure APIs, and encrypting data at rest and in transit. I think that's really good and really important.

With those on-prem connectors, it really gives you fast access to new services and new language models as well. And as we mentioned before, new POCs can be spun up pretty fast and really be tested as well.

And it just really depends with the spin-up and spin-down. With on-prem, you create those connections, you add those services, and then you can turn them off if they're in the cloud. But with the cloud-based services, it really just depends on how much you want to spend on building that solution.

And again, it's serverless, so you can spin it up and spin it down. But really, on-prem would definitely get you that initial access to new tools, new integrations, and trying new things. And again, you don't have the up-front cost of moving everything to the cloud.

AI Adoption Speed, Resources, and Choosing a Model

So which setup allows faster AI adoption and integration with these new tools?

Yeah, and and it's definitely made this the cloud native approach. And and there's a few, you know, few main points, few examples I can get for that. It's, it's really seamless integration, you know, from your cloud AI data sources to the cloud AI services such as Gen.

AI digital assistants, you know, document understanding different tools we use out there and being being able to leverage those different tools and have those connections be be very seamless, right? Not having a lot of security going into them. You know, you get access with identity management from one service to another service and you're good to go.

It's fast access to really understanding and then connecting to other services, such as the large language models that are out there, because of the secure and fast APIs integrated with those. That's also very important.

And so it gives you the scalability to try a large language model that might work for one solution, turn it off if it doesn't, and turn on another one for a different solution.

So secure, quick APIs that are built out in the cloud, all on the same virtual private network that we talked about earlier with security, allow you to really test different POCs, different tools out there.

If one thing doesn't work, you can shut it off. Again, we talked about the seamlessness of that and the cost savings behind that. Spin it up, spin it back down, try it. If it doesn't work, try something else, because there are just so many different tools at your disposal that you might want to use and try out for the different ways you're trying to solve problems in your business.

So definitely the cloud native approach for sure.

Yeah. We talk about these tools all the time, especially on this podcast, but are there any new AI features that JDE users can't easily access in a hybrid model compared to the...

Yeah, yeah. And that's good. You did mention vendor lock-in before, but it still gives you access to a lot of good models out there. When you really talk about the hybrid model, though, you don't have access to the rapidly changing or rapidly evolving models.

So let's say they get better and better. It's not just a quick update. It's a whole new connection out there. It's a whole different way to connect via an API token or authentication. So you can't just switch easily on and off.

There are large language models out there on the cloud, and you wouldn't really have access to all the new features such as, you know, autonomous agents.

As I mentioned before: agentic AI, RAG-based services, vector databases, all those big coined terms you're going to start to learn about as you learn more about AI. It's just really hard to connect to those with the hybrid model, and how you update your AI stack when you're developing a solution is something to really think about.

It's a very one-dimensional, one-sided, what-you-have-is-what-you-get kind of stack. Whereas with this one, you can be more dynamic and substitute different services out there as they become more relevant to your solution. So that's definitely something you really want to think about too.

And also, you really have access to the serverless GPU clusters that can host your data and can run higher-CPU large language models out there, and other services as well.

And I think it's really important to also mention that you can't really access these AI services, especially in the JD Edwards space, without being on Tools Release 9.2.8.2, which allows for secure authentication to these services too.

So that goes back to being able to access these services and the different loopholes, well, not loopholes, but the different connections you would have to go through, which would be a little more challenging with the hybrid model.

So that's the biggest thing. It's all the new technology and the different things you need to access, or upgrade to access.

Yeah, so you would have to upgrade. There's more of a cost on that side of things for maybe one of these things. And yeah, obviously we talked about cost earlier not being a main factor in one of these things. But you would have to upgrade to get certain services, to get the newer ones, to get the better things that are out there right now, which maybe you wouldn't really have to go through when it comes to being plugged into an actual cloud service.

But in terms of internal resources, right, what internal resources are required to really manage a hybrid model versus maybe a cloud model?

Yeah. And we mentioned resources a little bit earlier because that was part of the cost. And this is where we're going to highlight who would actually be involved in that hybrid model and really why they would be involved, right?

So, you know, connectivity is the biggest thing for the hybrid model: connecting those on-prem data sources to the cloud-based services and solutions we talked about out there. So you need a network engineer, and you might need multiple network engineers to keep that connection up or switch between different services as you go. You need different system admins and security teams in house to make sure your security infrastructure doesn't change while you're able to implement AI solutions securely. You really need security teams to make sure everything's compliant, right? HIPAA compliant if needed. Just an example, right?

You also really think about the CNCs who manage the JDE environment, going back to them real quick. You have CNCs in house, and maybe you have a vendor, but you still have to manage your JD Edwards environment. You're still connecting directly to it, right?

So you track your ERP and your system, keeping it up to date, making sure all the data is clean, all that sort of stuff. You need people around that, you know, database admins for connectivity.

If you're connecting to other third-party data sources, they have to oversee and manage different pipelines as well. And again, on the AI side, it's really around data scientists. You might even need two, so that's very important too.

Just on the AI side, you're going to need some people as well who understand that aspect of it.

Whereas in the cloud, you still need some AI knowledge, right? But some of these tools become easy to use via a GUI, right? Easier to understand. There's good documentation out there, but you just really need data engineers who understand how to move data from one place to another in the cloud.

You might have a light cloud infrastructure team that understands maybe the identity and access management or other pieces of it. And, as I mentioned before, you might have a small machine learning team who really understands the tools.

But with these cloud providers and the ability to connect everything together pretty seamlessly, that becomes pretty light.

So you can see kind of the big difference between what you would need with the hybrid model versus the cloud there as far as internal teams.

Closing

Yeah. So what I'm hearing is that having that hybrid model might put a little bit more pressure on your internal teams. Am I right about that?

Yeah, yeah. Because you already have those people. Let's just take, for example, the DBAs or CNCs or security team: they already have their day-to-day jobs, and now you're pulling them in to do more AI initiatives that might be on your road map.

So it just adds to the workload, right, and can really strain time and resources that they might not have had before. So you're really asking other people with expertise in house, or, and I didn't mention this before, you would have to hire more, right? Hire more people with those capabilities, with that understanding that you may not have in house, to fill those gaps and minimize the high workload that your internal staff may have.

So, yeah, with the cloud model, maybe you just have a smaller team or a smaller company or anything like that. Would these smaller teams benefit more from going fully cloud, or is there a certain window there of, oh yeah, on-prem, you can maybe make it work?

Yeah. And it still goes back to the budget, though: do we have enough to move off-prem? But let's put that aside. I think it does, because of what we talked about before with leveraging different cloud services and the scalability and turning resources off and on.

It's really about just having a good cloud team as well as a small AI team that understands those services that are out there and being able to seamlessly connect. And you can turn things off and spin them down; just not as many internal people would really need to be involved in that.

It's almost like a separate sort of thing because, as we mentioned before, your security is really intact, right? You just have to worry about the users accessing different services. So the security is already there. The infrastructure is all in the cloud, and basically you're just down to an AI team and a cloud engineer, if you want to think about it that way.

So smaller teams, definitely. Especially if you're building a small team for the initiatives. But if you're picking other resources from different teams, yeah, it will come at some cost. So I would say I agree with that, Nate.

Yeah. And there might be an issue in the beginning with the whole lift and shift, but past that, I can't really see an actual issue if you do have a small team or a smaller company or anything like that. So that makes a lot of sense.

But let's get to the reason why everyone's here. When should a company go hybrid and when should they move everything to the cloud?

Yeah, that's a fantastic question, Nate. The hybrid model is for when you've already made a lot of investments on-prem and you've got to stay on-prem. That's very important. It's also for when you have strong security and compliance regulations within your business and have to keep most of the infrastructure within the four walls, with the exception of creating those secure connections to go outside of your four walls and connect to those different AI services out there.

And we also want to think about, you know, do you just want a quick win, right? Just an internal chatbot, where you want to connect to those LLMs out there securely and have an internal chatbot that your users can use, just to get started on your AI journey.

And then lastly, it's really around, do you have the budget to be flexible with AI, or is it something you're just trying to get started with? So I think that's very important to understand as well.

The cloud model is definitely for when you're really looking to scale fast and really reinvent how you want to do business. You're taking AI to the full extent, you want to really invest in it, and you have a strong budget around that, right?

And I think another thing, too, is when you're also really looking to unify your data with AI, understand all of it, and combine it all in sort of a data lake where you can easily access those services securely via the VPC or VPN inside a cloud.

And it's also, as we mentioned before, the ability to scale up and scale down those AI services as you're trying new ones, adding or removing services based on maybe an AI project or a solution you built out.

You need something new, or you got rid of something because it's become obsolete or deprecated and you want to use the newer and greater thing. It's easier to do that with the scalability.

And then if you really just want to understand the operational expense of implementing AI, you have more of a budget around that, right? And then you can understand what you're actually investing in AI.

And if you don't have money to invest, then, as we said, you can turn off those AI services as needed. But it's really more that you understand what you're investing. So yeah.

And honestly, no matter which model you choose, success comes down to one thing: alignment between your data strategy, your risk profile, and your innovation goals.

If you're not sure where to start, reach out to ERP Suites. They've helped hundreds of JD Edwards customers modernize with confidence. Visit erpsuites.com/AI to learn more or to sign up for our assessment to start your AI journey today.

You can also download our ten-week starter guide for free right now. Just look at erpsuites.com/AI.

Thanks for listening to Not Your Grandpa's JD Edwards. Drew, huge shout-out to you for jumping back onto this podcast. Always love hearing you talk about AI.

But thanks for listening to Not Your Grandpa's JD Edwards, and we'll see you next time.

Video Strategist at ERP Suites
